The Ultimate Google Algorithm Cheat Sheet

Have you ever wondered why Google keeps pumping out algorithm updates?

Do you understand what Google algorithm changes are all about?

No SEO or content marketer can accurately predict what any future update will look like. Even some Google employees don’t understand everything that’s happening with the web’s most dominant search engine.

All of this confusion presents a problem for savvy site owners: Since there are about 200 Google ranking factors, which ones should you focus your attention on after each major Google algorithm update?

Google has issued four major algorithm updates, named (in chronological order) Panda, Penguin, Hummingbird and Pigeon. In between these major updates, Google engineers also made some algorithm tweaks that weren’t that heavily publicized, but still may have had an impact on your website’s rankings in the search results.


Google Algorithm Changes Overview

Before you can fully understand the impact of each individual algorithm update, you need to have a working knowledge of what a search engine algorithm is all about.

The word “algorithm” refers to the logic-based, step-by-step procedure for solving a particular problem. In the case of a search engine, the problem is “how to find the most relevant webpages for this particular set of keywords (or search terms).” The algorithm is how Google finds, ranks and returns the relevant results.

Google is the #1 search engine on the web and it got there because of its focus on delivering the best results for each search. As Ben Gomes, Google’s Vice-President of Engineering, said, “our goal is to get you the exact answer you’re searching for faster.”

From the beginning, in a bid to improve on its ability to return those right answers quickly, Google began updating its search algorithm, which in turn changed – sometimes drastically – the way it delivered relevant results to search users.

As a result of these changes in the algorithm, many sites were penalized with lower rankings, while other sites experienced a surge in organic traffic and improved rankings.

Some brief history: Algorithm changes can be major or minor, and most are minor. In 2014, Google made approximately 500 changes to the algorithm. Some of those tweaks caused a large number of sites to lose their rankings.

Ten years earlier, in February 2004, Google issued the Brandy update. A major algorithm change, Brandy focused on increased attention to link anchor text and something called “Latent Semantic Indexing”: basically, recognizing synonyms and related terms, so that a page can be judged relevant even when it doesn’t repeat the exact search terms.

Eventually, Google’s focus shifted to keyword analysis and intent, rather than solely looking at the keyword itself.

Going back even further, Google made a number of changes in 2000, including the launch of the Google toolbar and a significant tweak known as “Google Dance.” However, those updates had little effect on the search results that matter to business websites.

If you want to be up-to-date on these algorithm changes, you can review the entire history of Google’s algorithm changes.

Google needs large volumes of data to be able to make better decisions. The more relevant results people get when they search for a specific keyword, the more accurate the data that Google can extract and return for other searchers.

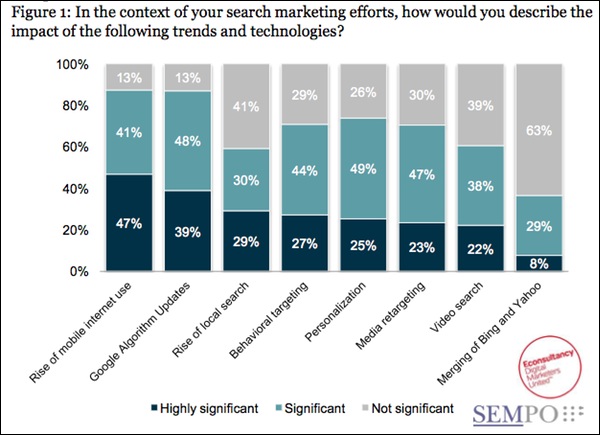

That’s why these changes have also impacted mobile search results. Google’s recent changes, coupled with the explosive growth in mobile device use, have been significant for search marketers.

In this article, we’ll focus on four major Google algorithm changes. Each of these updates had, and continues to have, a significant impact on search engine marketing, on-page SEO and your site’s overall content strategy. Specifically, we’ll discuss:

- The Panda update

- The Penguin update

- The Hummingbird update

- And, the most recent algorithm change, Google Pigeon

The Core SEO Benefits of Google Algorithm Changes

In the last couple of years, we’ve seen the positive effect of Panda, Penguin, Hummingbird and the Pigeon algorithm changes on SEO. Some of these benefits are:

Google’s user-focused commitment – Remember Google’s goal: to help each search user find the correct information they’re looking for as quickly as possible. These updates, especially Panda, further solidify Google’s commitment to their users.

Although there is still a lot more work to be done by Google in improving search results, the odds are good that you’ll get relevant and informative results on the first page of results from Google when you search.

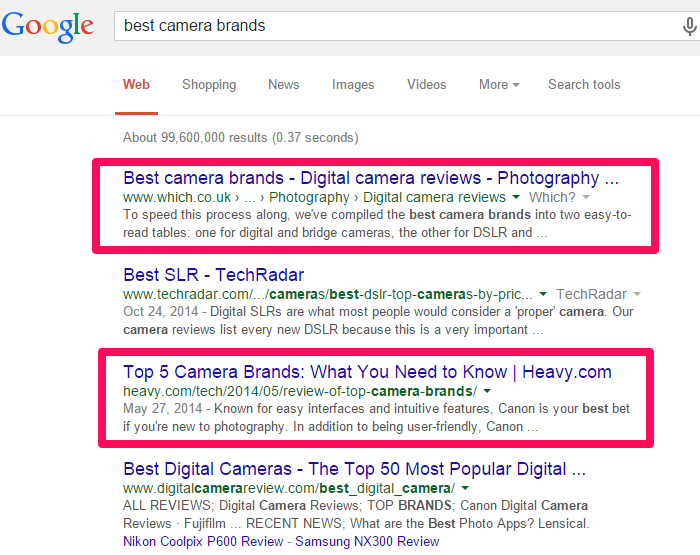

For example, let’s say you search for best camera brands. Your top results will likely include those search terms, or their closest synonyms, in close proximity to each other:

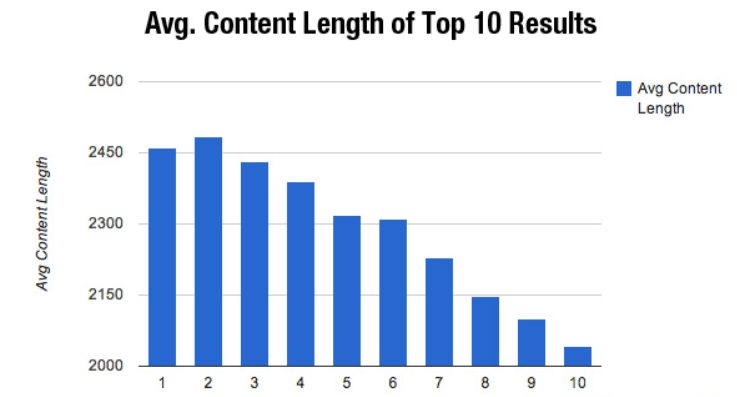

Improved rankings for in-depth content – serpIQ agrees that in modern SEO, “length is strength.” Longer content tends to dominate search engine results pages these days.

Prior to Google Panda, thin content could, and often did, rank highly. Content writers could churn out 300 words per article or blog post, throw in some high PR links and wind up ranked #1 in Google – and remain there for months. Those days are over, so post long content if you want to improve your rankings, says Brian Dean.

Content farms (sites that frequently generate and quickly publish low-quality and thin content) were the major culprits. Sites like EzineArticles, ArticleAlley and Buzzle lost their rankings, even though they had aged domain names. Their content couldn’t provide meaningful, relevant, long-term solutions.

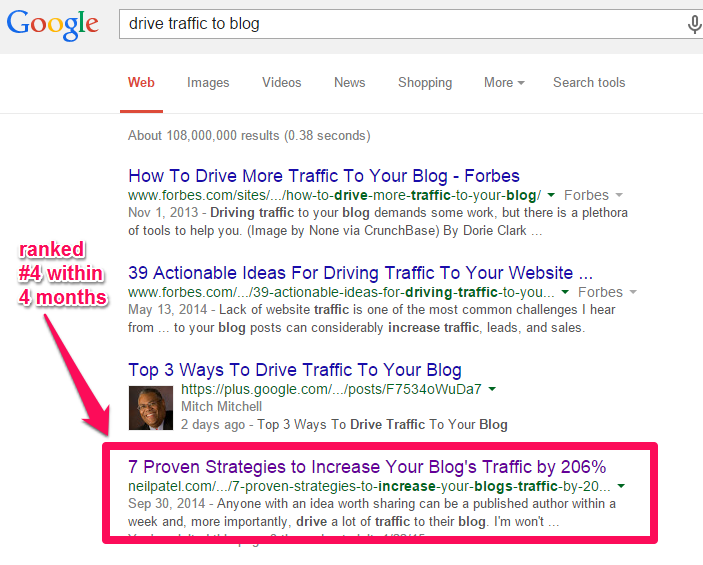

These days, Google gives preference to in-depth pieces of content that are likely to remain useful. For example, one of the articles from this blog is sitting at #4 for an in-demand keyword phrase:

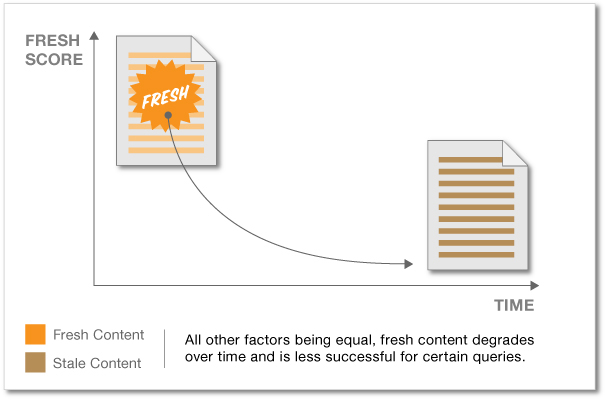

Fresh content advantage – When you publish fresh content on your site, Google gives your webpage a freshness score. Over time, this freshness score will fizzle out and your site will require more fresh content.

Cyrus Shepard notes that “the freshness score can boost a piece of content for certain search queries,” even though it then degrades over time. The freshness score of your web page is initially assessed when the Google spider crawls and indexes your page.

Therefore, if you’re always updating your blog or site with relevant, well-researched and in-depth (2000+ words) content, you should expect improved rankings and organic visitors from Google. By the same token, sites that publish irregularly or sporadically will find it hard to retain a solid position in Google.

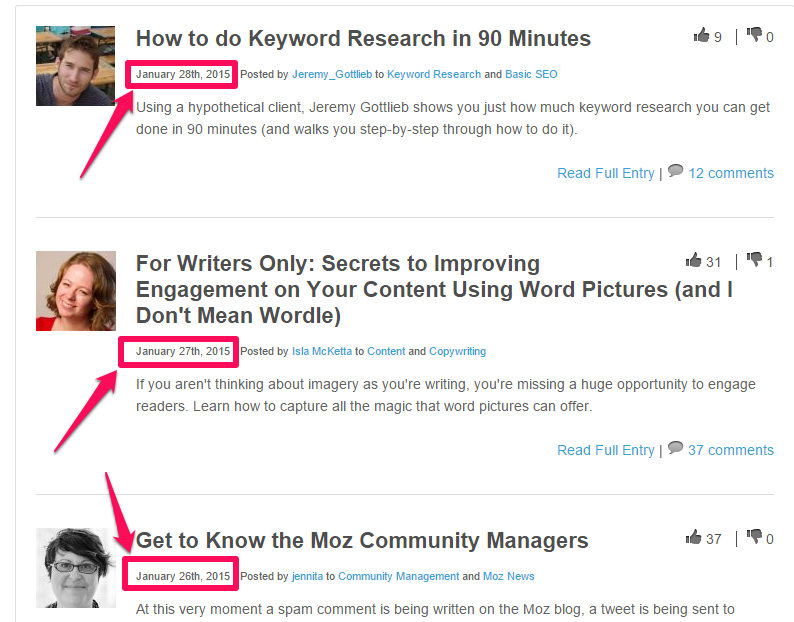

Most of the popular sites post new content at least once a week. Some sites, like Moz, publish every day.

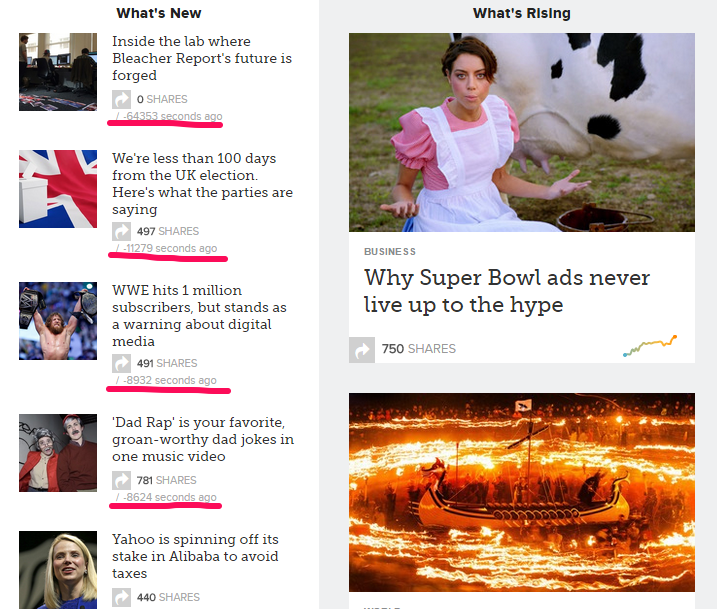

In order to continually boost the freshness score, some popular brands, like Mashable, publish several pieces of detailed content on a daily basis.

Brand awareness – This may not be obvious, but the Google algorithm changes support a shift towards brand-building.

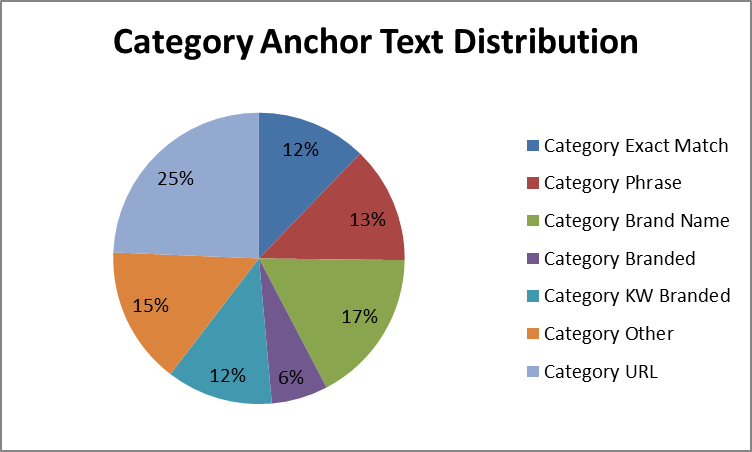

Before Google started penalizing sites that use a lot of keyword-rich anchor text for internal links, over-optimization used to work. But, SEO has evolved and building links shouldn’t be the major focus (even though it’s important). Moz recommends that 17% of your anchor text should be brand names.

Corporate organizations, small business owners and bloggers have become meticulous when using anchor text. Build links that will improve your brand and relevance online and avoid building links to artificially boost your organic rankings.
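If you want to check your own link profile against that 17% figure, a rough calculation is enough. The Python sketch below assumes you’ve already exported your anchor texts (from OpenSiteExplorer or a similar tool) into a plain list; the function name, the sample anchors and the simple substring match used to spot brand mentions are all illustrative assumptions, not how Moz calculates anything.

```python
# Moz's suggested share of branded anchors, as cited above.
BRANDED_TARGET = 0.17


def branded_anchor_share(anchors, brand_terms):
    """Return the fraction of anchor texts that contain any brand term.

    Matching is a case-insensitive substring check (an assumption; real
    brand detection would need to handle misspellings and variants).
    """
    if not anchors:
        return 0.0
    terms = [term.lower() for term in brand_terms]
    branded = sum(1 for anchor in anchors if any(t in anchor.lower() for t in terms))
    return branded / len(anchors)


# Hypothetical anchors exported from a backlink tool.
anchors = ["Beardbrand", "best beard oil", "beardbrand.com", "click here"]
share = branded_anchor_share(anchors, ["beardbrand"])
status = "meets" if share >= BRANDED_TARGET else "is below"
print(f"{share:.0%} of anchors are branded, which {status} the 17% guideline")
```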

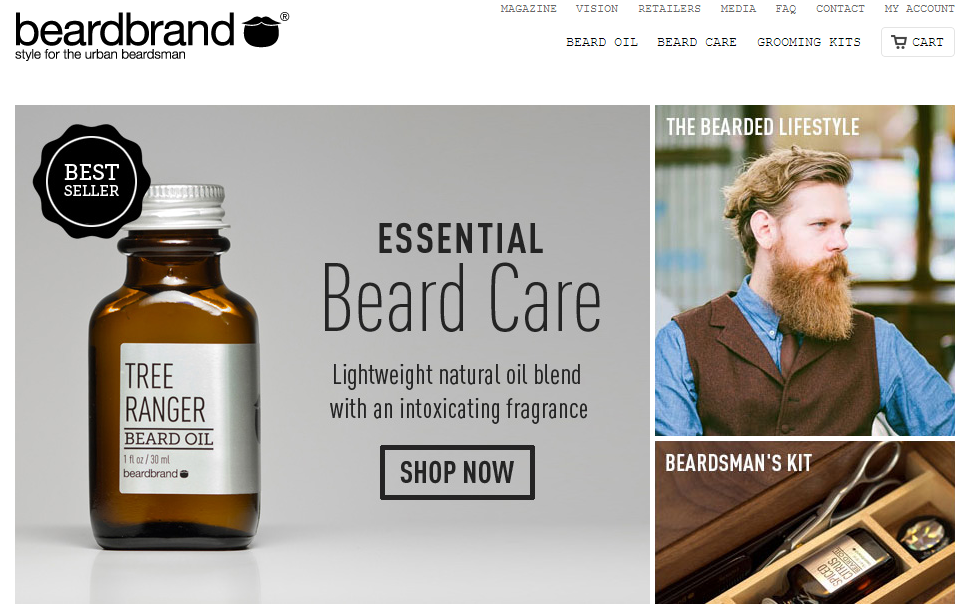

Look at BeardBrand.com. Their major keyword (best beard oil) is currently on the first page of the Google results.

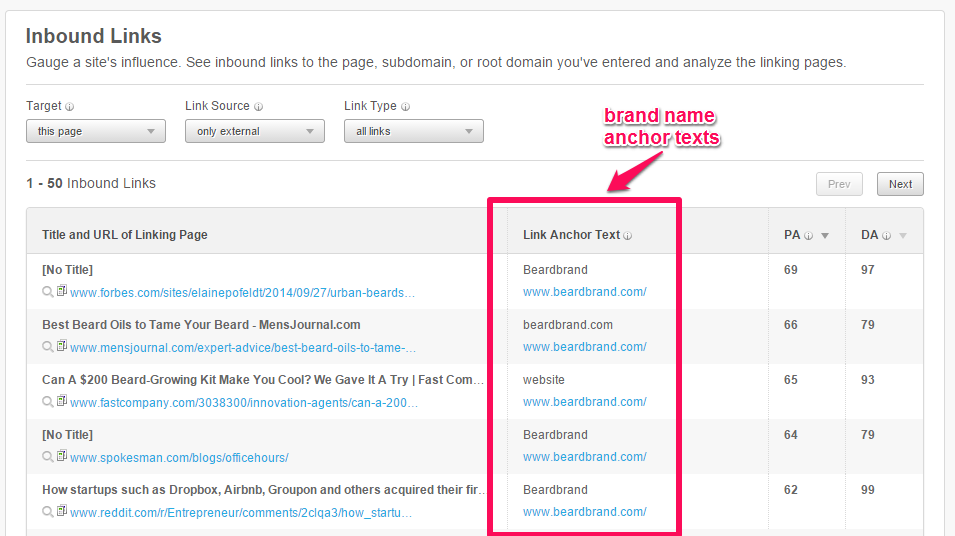

Many of their anchor text phrases contain brand names and domain URLs. This is the result I got from OpenSiteExplorer:

Now, let’s look at the Google algorithm updates in more detail…

Google Panda

You’ve probably heard of Panda. But, unless you’re a veteran SEO expert who daily consumes Google-related news, you may not be intimately familiar with its details.

The Google Panda update revolutionized SEO, prompting every business that relies on Google for lead generation and sales to pay attention.

One important lesson that we’ve learned is that SEO is never constant. It’s continuously evolving and today’s “best practices” can become outdated tomorrow. After all, who would have believed that exact match domain names would ever be penalized by Google?

What Is the Panda update?: Panda was named after the Google engineer Navneet Panda.

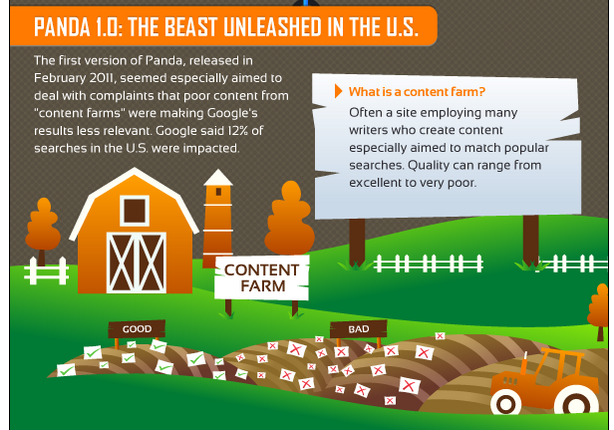

In February 2011, the first search filter that was part of the Panda update was rolled out. It’s basically a content quality filter that was targeted at poor quality and thin sites, in order to prevent them from ranking well in Google’s top search engine results pages (SERPs).

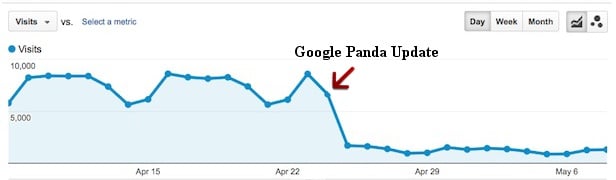

Whenever a major Panda update happened, site owners noticed either a drop in organic traffic and rankings or a boost.

The Panda algorithm update changed the SEO world. It changed content strategy, keyword research and targeting. It even changed how links are built, since high-quality relevant links pointing to a webpage ultimately add to its value.

Google could now determine more accurately which sites were “spammy” and which sites would likely be deemed useful by visitors.

Before Panda, poor content could rank quite highly or even dominate Google’s top results pages. Panda 1.0 was unleashed to fight content farms. Google said the update affected 12% of searches in the U.S.

Note: Panda is called an update because the filter runs periodically. And, every time it runs, the algorithm takes a new shape.

In other words, high quality content that was hit may bounce back in the search results, while pages that escaped the previous update can get caught in the Panda net.

There has been a Panda update every 1-2 months since 2011, for a total of 26 updates since February 14, 2011. This number may not be precisely accurate, because a lot of minor tweaks most likely have occurred in-between them, but it lies within that range.

1) Panda 1.0 update: The search filter was aimed at content farms — those sites that employ many writers who create poor quality content around specific keywords in a bid to rank in Google’s top ten results. This update was primarily aimed at U.S. sites and affected 12% of search results.

However, this doesn’t mean that all multi-author sites are spammy. For example, Moz has hundreds of writers, but it still enjoys top rankings in Google, because it makes sure that the content delivers plenty of value to readers.

In other words, content that is well researched, in-depth and that gets shared on Facebook, Twitter, Pinterest, Google+ and the other major social platforms continues to rank well – perhaps even more highly than before.

2) Panda 2.0 update: This update, released in April 2011, was targeted at international search queries, though it also impacted 2% of U.S. search queries.

This filter also affected international queries on google.co.uk and google.com.au and English queries in non-English speaking countries e.g. google.fr, google.cn, when the searchers chose English results.

Amit Singhal, who is in charge of search quality at Google, told Vanessa Fox that Google was “focused on showing users the highest quality, most relevant pages on the web.”

3) Panda 2.1 – 2.3: There were minor updates in May, June and July 2011 (dates approximate), in which Google incorporated more signals to help gauge the quality of a site.

Low quality content and pages were further penalized, while those who worked extremely hard at producing rich and interesting content saw a boost in organic traffic.

Panda 2.3 was minor and focused on the user’s experience. Bloggers and site owners who wrote and published content that engaged the end-user and enhanced site navigability benefited from these changes.

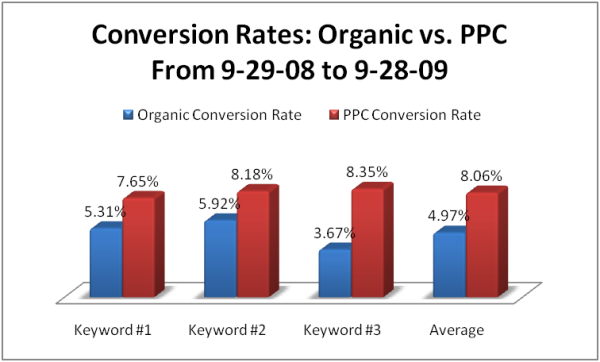

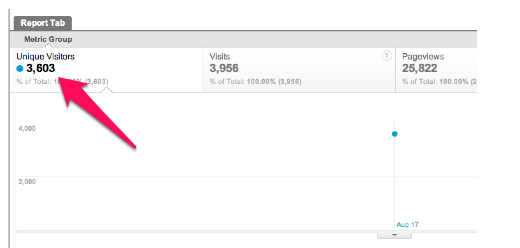

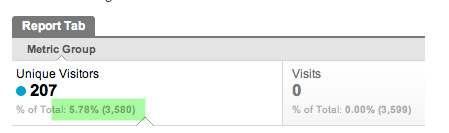

4) Panda 2.4: This update was rolled out on August 12, 2011 and affected about 6-9% of user queries. It was focused on improving site conversion rates and site engagement, says Michael Whitaker.

Prior to the update, Michael Whitaker was getting up to 3,000 unique visitors to his site.

As soon as the Panda 2.4 update was rolled out, his monthly site traffic dropped to 207.

5) Panda 3.0: This search filter began to roll out on October 17, 2011 and was officially announced on October 21, 2011. This update brought large sites higher up in the SERPs – e.g., FoxNews, Android.

After Panda 2.5, Google began to update its algorithm more frequently, a pattern that became known as “Panda Flux.”

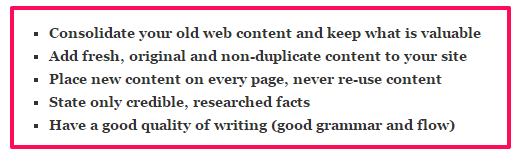

To be on the safe side with your content, Express Writers recommends the following steps:

6) Panda 3.1 went live on November 18, 2011. It was minor and affected about 1% of all search queries. Although this number seems low, it is still significant, considering the number of searches conducted every day.

Each of these algorithm changes came about as a result of search users not getting relevant and useful information. As an advertising media company, Google wants to make money and unless they satisfy searchers, how would they do that?

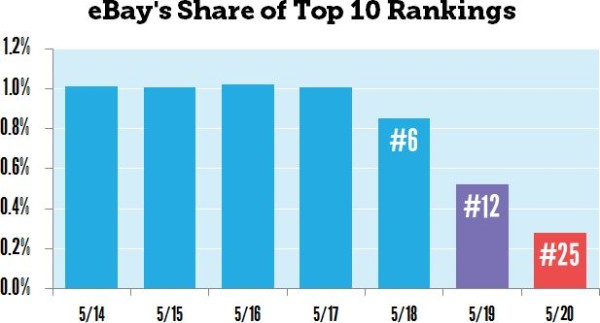

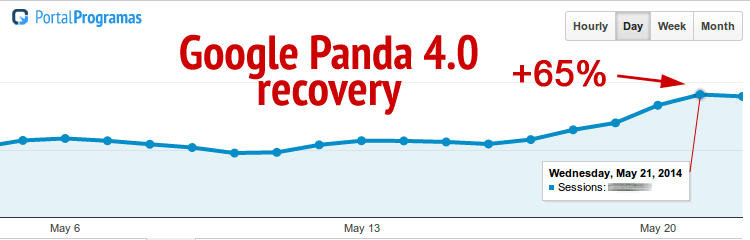

7) Panda 4.0: On May 20, 2014, Matt Cutts tweeted that Google was rolling out Panda 4.0. It was the next generation of Panda and it generated both winners and losers.

This update had a big impact. eBay lost a significant percentage of the top 10 rankings it had previously enjoyed.

Panda 4.0 was primarily targeted at larger sites that have been dominating the top 10 results for seed keywords.

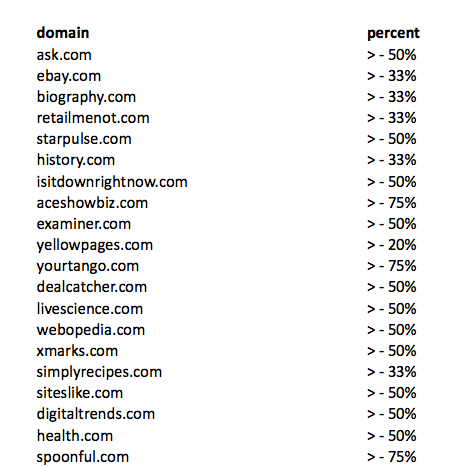

When you build a site today, you have to consistently write and publish in-depth content. This content must add value, be interesting to the reader and solve a definite problem. If you fail to do that, you won’t engage readers and the conversion rate will be low. The major losers after Panda 4.0 rolled out were:

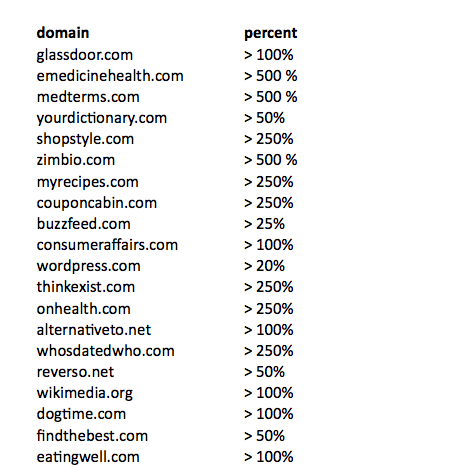

As with every Panda update, there are winners too. Here’s a list provided by Search Engine Land:

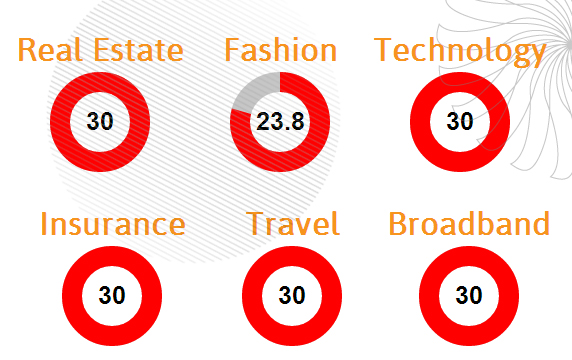

Overall, not every sector or industry lost in Panda 4.0. The major ones worth noting were:

Factors that lead to a Panda penalty (and what to do about them): Since some sites lost rankings and traffic after Panda while others gained, it’s worth asking: what makes a site vulnerable to a Panda attack? Here are six factors that may be to blame, along with suggestions for fixing the underlying problem and getting back in Google’s good graces:

Duplicate content – Do you have substantial blocks of identical content on your site? Duplicate content can cause a lot of problems, both for your SEO and for your site visitors.

In this video, Matt Cutts explains that duplicate content per se may not affect your site, except when it’s spammy.

It’s not advisable to redirect duplicate pages. When the Google spider discovers duplicate content on your site, it will first analyze other elements that make up your web page, before penalizing you.

Thus, it’s recommended that you completely avoid any form of content duplication and focus on publishing unique, helpful and rich content. Don’t be deceived: there is no balance between original and duplicate content.

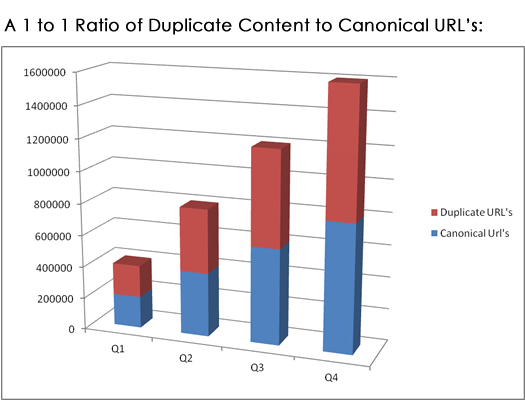

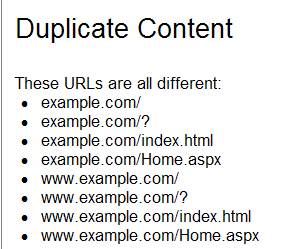

When the same content appears across your domain names and URLs, it could trigger a problem.

Some time ago, Greg Grothaus explained why this is a problem, by sharing the image below. Since the content is the same, though the URLs are slightly different, Google “thinks” you have duplicate content.

Fortunately, finding duplicate content on your site or blog is fairly easy to do. Simply follow these steps:

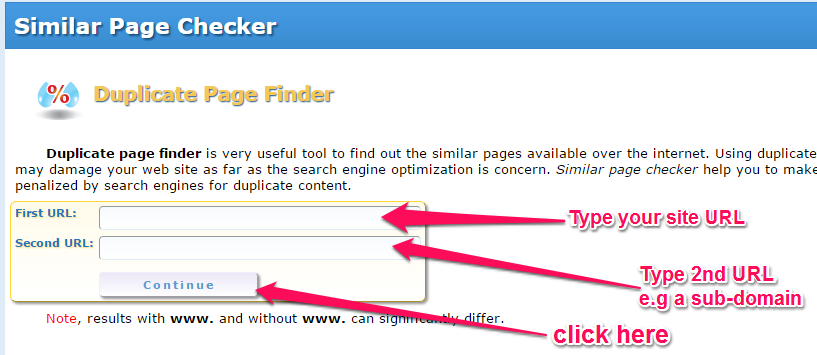

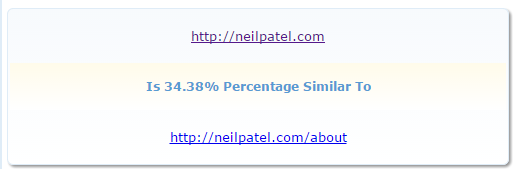

Step #1: Visit the Duplicate Page Finder tool. Input your site URL. Input another URL that you want to compare for duplicate content – e.g., yoursite.com/about.

Step #2: Analyze your results. If the results show that your pages contain some duplicate content, you can then solve the problem in one of two ways: either (1) revise one of the pages so that each page contains 100% original content, or (2) just add a no-index tag, so that the Google spider will ignore it and not index or pass link juice to it.
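The tool doesn’t document exactly how it scores overlap, so treat the following as an illustration of the general idea rather than a description of Duplicate Page Finder. One common technique is to break each page’s text into overlapping five-word sequences (“shingles”) and measure how many of them the two pages share, which is known as Jaccard similarity. The function names and the shingle length `k=5` are assumptions for this sketch.

```python
import re


def shingles(text, k=5):
    """Return the set of overlapping k-word sequences in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(text_a, text_b, k=5):
    """Fraction of shingles shared by two texts: 0.0 (none) to 1.0 (identical)."""
    a, b = shingles(text_a, k), shingles(text_b, k)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


home = "We help small businesses grow with honest, practical SEO advice."
about = "We help small businesses grow with honest, practical SEO advice and training."
print(f"Similarity: {jaccard_similarity(home, about):.0%}")
```

A score close to 100% means the two pages are near-duplicates; where you draw the line below that is a judgment call.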

Another problem that can trip you up is where your content is duplicated on someone else’s site. To find duplicate content outside your site, follow these steps:

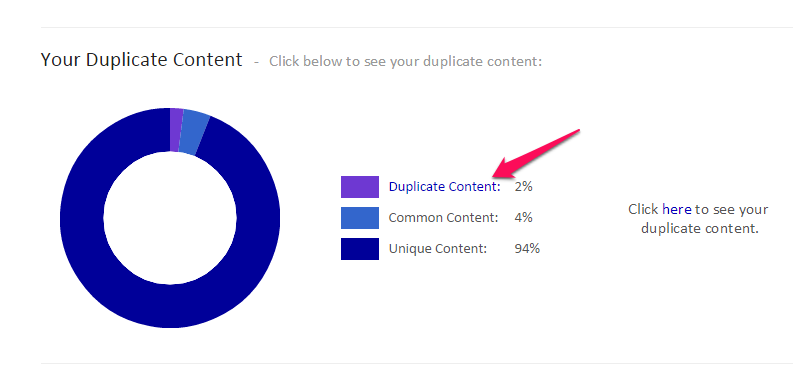

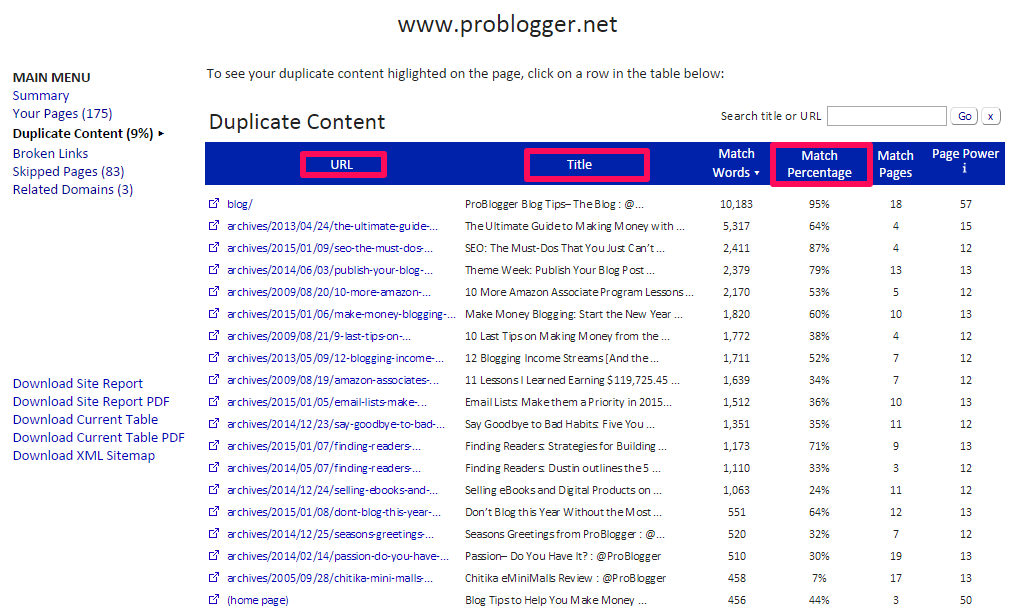

Step #1: Go to Siteliner.com. Plug in your site URL and hit the “Go” button.

Step #2: Analyze duplicate content pages. On the results page, scroll down and you’ll find the “duplicate content” results.

Once you’ve clicked the “duplicate content” link, you’ll get a list of all the pages that you need to make unique, along with how much of each page is duplicated.

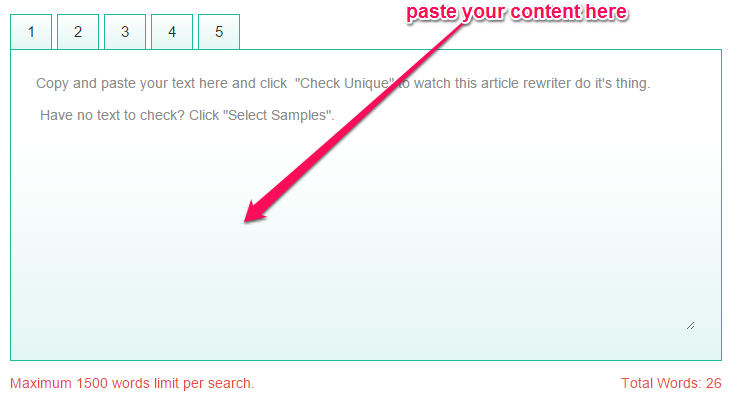

If you accept guest posts or sponsored articles on your blog, you should make it a habit to check for duplicate content first. The moment you receive the content, quickly run it through any one of the many duplicate content/plagiarism checker tools available on the web – e.g., smallseotools.com.

After running your content through the tool, you’ll see an analysis of the piece’s unique vs. duplicate phrases, sentences and paragraphs.

Note: Looking at the screenshot above, we see that the results specify 92% of the content is unique. That’s a fairly decent score and one you can live with.

However, if the piece you’re checking scores between 10% and 70% unique content, you should either improve it or take it down. Excessive duplicate content can result in a Google penalty for your site when the next Panda update is rolled out.
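Dedicated plagiarism checkers compare text against the whole web, but the basic idea behind a “percent unique” score can be sketched in a few lines. This Python example assumes you have the guest post and a collection of texts to compare it against; it counts exact sentence matches only, which is far cruder than tools like smallseotools.com, and the function names are hypothetical. The triage step applies the rough cut-off discussed above, treating anything at or below 70% unique as needing work (lumping scores under 10% in with that group is an assumption).

```python
import re


def split_sentences(text):
    """Naively split text into lowercase sentences on ., ! or ? plus whitespace."""
    return [s.strip().lower() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def percent_unique(submission, known_texts):
    """Percentage of the submission's sentences that appear in none of `known_texts`."""
    seen = set()
    for text in known_texts:
        seen.update(split_sentences(text))
    sentences = split_sentences(submission)
    if not sentences:
        return 0.0
    unique = sum(1 for s in sentences if s not in seen)
    return 100.0 * unique / len(sentences)


def triage(score):
    """Apply the rough cut-off above; scores under 10% are treated the same way here."""
    return "acceptable" if score > 70 else "improve it or take it down"


guest_post = "Beards need care. Oil keeps hair soft. Brush daily."
existing = ["Oil keeps hair soft. Trim every few weeks."]
score = percent_unique(guest_post, existing)
print(f"{score:.0f}% unique: {triage(score)}")
```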

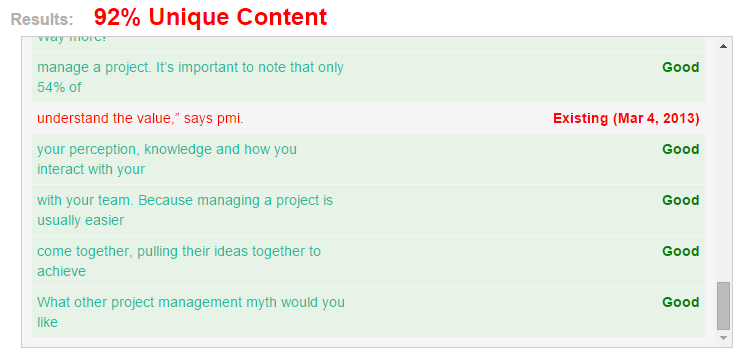

It’s also very important to note that just because you have 100% unique content on your site, that doesn’t automatically mean higher rankings for you. As Frank Tankington rightly noted, “If there are five articles that are very similar, no matter what article was written first, the one with the most backlinks will sit on top of the SERPs.”

However, it’s no longer about having the “most” backlinks. In this post-Penguin era, it’s also about having trusted and relevant backlinks.

Low quality inbound links – If you’ve discovered that your SEO is tanking and you’re confident that your site content is both useful and unique, the next step is to audit your inbound links.

Low quality links pointing to your pages can also trip you up, because Penguin 2.1 was all about putting the search engine focus on quality over quantity.

According to Kristi Hines, your past bad content can come back to haunt you. So, it’s not enough to begin building good, high quality links today. The ones that you built when you first started marketing can also have an effect on your rankings.

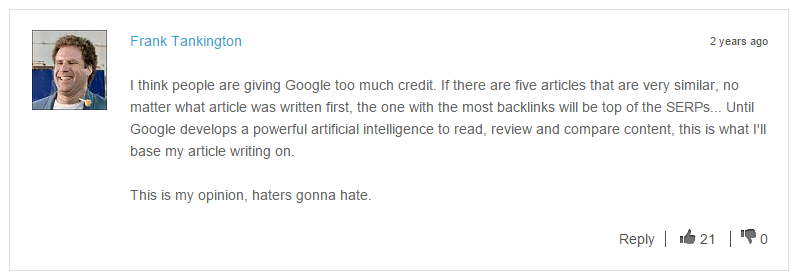

The ugly truth is this: If your site has a large number of inbound links coming from irrelevant sites (i.e., content farms or sites in a different niche altogether), your chances of getting a Google penalty increase.

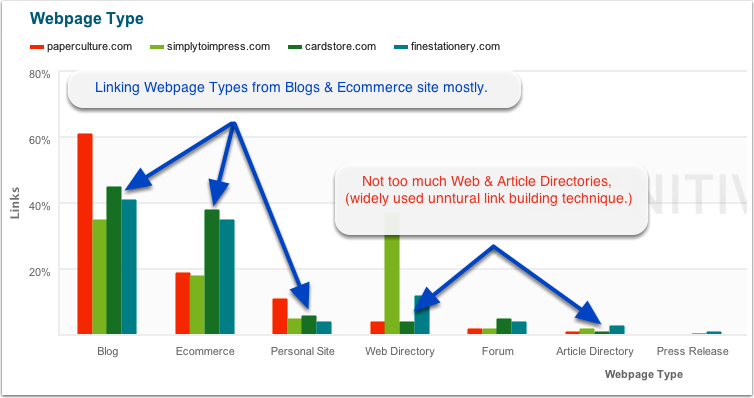

In this Penguin era, you need to focus on getting incoming links, predominantly from sites that have the same theme or subject as your site. The SEM Blog chart below gives us a clearer picture.

But, before you can do anything about those low-quality links, you first need to find them. In other words, you need to find out how many backlinks you have built or gained over the course of your site’s existence. Fortunately, this is fairly simple. Just follow these steps:

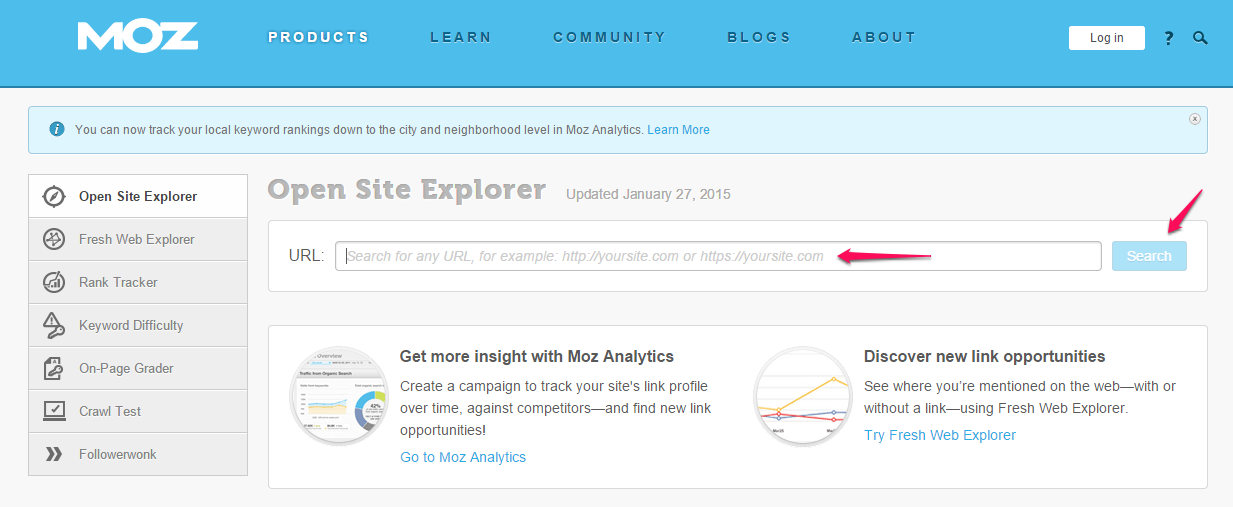

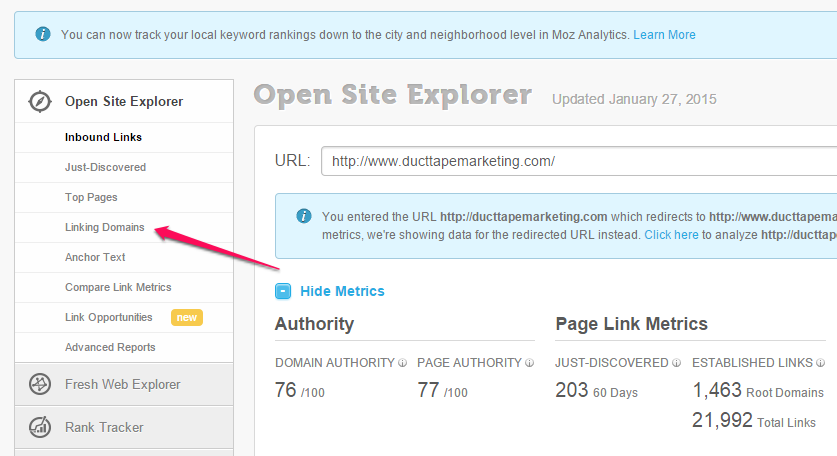

Step #1: Go to OpenSiteExplorer. Type in your site URL. Hit the “Search” button.

Step #2: Click on “Linking Domains.”

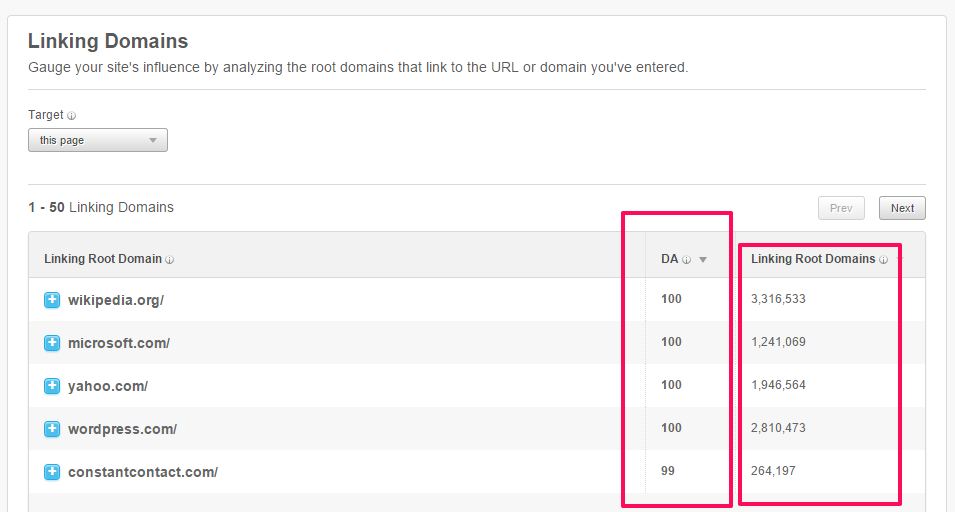

Step #3: Analyze the linking domains.

Toxic links tend to show up in a few predictable places. Footer links, sitewide links and links with over-optimized anchor text, among others, are all good places to start.
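Once you’ve exported your linking domains, you can do a first pass automatically before reviewing links by hand. The Python sketch below flags the three patterns just mentioned: footer links, links that look sitewide (many links from one domain) and exact-match anchors for your money keywords. The record fields (`domain`, `anchor`, `in_footer`), the threshold of 20 links per domain and the function name are assumptions; flagged links are candidates for review, not confirmed toxic links.

```python
from collections import Counter


def flag_links(links, money_keywords, sitewide_threshold=20):
    """Return (link, reasons) pairs for backlinks worth reviewing by hand.

    Each link is a dict with the hypothetical keys 'domain', 'anchor'
    and 'in_footer'.
    """
    links_per_domain = Counter(link["domain"] for link in links)
    keywords = {k.strip().lower() for k in money_keywords}
    flagged = []
    for link in links:
        reasons = []
        if link.get("in_footer"):
            reasons.append("footer link")
        if links_per_domain[link["domain"]] >= sitewide_threshold:
            reasons.append("possible sitewide link")
        if link["anchor"].strip().lower() in keywords:
            reasons.append("exact-match anchor")
        if reasons:
            flagged.append((link, reasons))
    return flagged


backlinks = [
    {"domain": "cheap-links.example", "anchor": "best beard oil", "in_footer": True},
    {"domain": "groomingblog.example", "anchor": "Beardbrand", "in_footer": False},
]
for link, reasons in flag_links(backlinks, ["best beard oil"]):
    print(link["domain"], "->", ", ".join(reasons))
```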

If you need more information about remedying either of these first two Panda-penalty factors – duplicate content and low quality inbound links – try these helpful articles:

- How To Identify and Remedy Duplicate Content Issues on Your Website

- How To Find Low Quality Inbound Links with Buzzstream

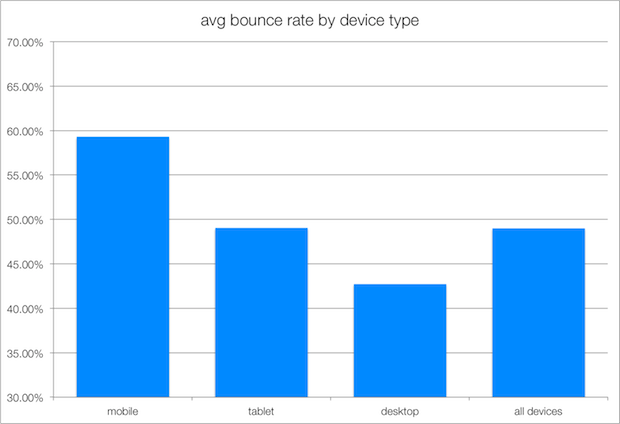

High bounce rate – The term “bounce rate” refers to the percentage of your site’s sessions that are single-page sessions. A single-page session is when someone visits a page on your site and then leaves altogether, without interacting further with your site (clicking other links or reading more of your content).

A high bounce rate can signal to Google that your visitors aren’t finding what they’re looking for on your site or that they don’t consider your site to be terribly useful.

What’s an acceptable or average bounce rate? The answer will vary depending on your industry. What truly matters is that your conversion rates are increasing. Here is the average bounce rate by industry.

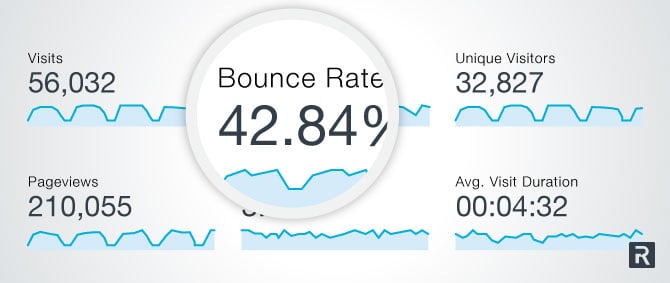

It’s absolutely possible to reduce your bounce rate and therefore reverse that trend. For instance, Recruiting.com reduced their bounce rate to 42.84%.

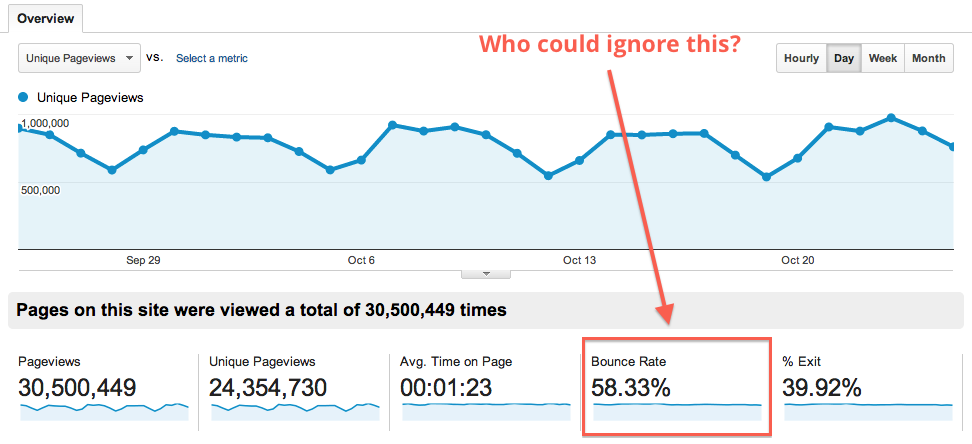

As a rule of thumb, if your bounce rate is above 60%, then you will probably want to work on reducing that number. The bounce rate below is fair. How does yours compare?
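The arithmetic behind bounce rate is simple enough to check yourself. In this Python sketch, each session is represented only by the number of pages viewed, which is a simplification (analytics tools can also count interaction events), and the 60% line is the rule of thumb above, not an official threshold. The function name and sample data are hypothetical.

```python
def bounce_rate(page_views_per_session):
    """Percentage of sessions in which the visitor viewed exactly one page."""
    sessions = list(page_views_per_session)
    if not sessions:
        return 0.0
    single_page = sum(1 for views in sessions if views == 1)
    return 100.0 * single_page / len(sessions)


# Hypothetical page-view counts for ten sessions.
sessions = [1, 3, 1, 2, 1, 5, 1, 1, 4, 2]
rate = bounce_rate(sessions)
print(f"Bounce rate: {rate:.1f}%")
if rate > 60:
    print("Above the 60% rule of thumb: worth investigating.")
```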

Site design and usability are the basic factors that affect your bounce rate. Remember, if users can’t easily find what they’re looking for on your page, Google may conclude that your content is not useful, because its rankings aim to reflect how real people respond to your pages.

When you engage your blog readers, they in turn engage with your site, which then lowers your bounce rate and gives your site a higher ranking score.

Bounce rates can fluctuate significantly over time and a bounce rate that jumps up a bit isn’t necessarily always a bad thing. It could be caused by a major tweak on your site. For example, when you redesign your blog, your bounce rate will likely increase a bit, temporarily, while visitors get accustomed to the new look and layout.

Low repeat site visits – If your site’s visitors only come to your site once and never return, Google can take that fact to mean that your site isn’t all that relevant or useful.

It’s a good idea to pay attention to your repeat visitor statistics. Once you’ve logged into your Google Analytics account, locate your repeat visitor statistics and then compare that number to previous months.
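If you’d rather track the trend outside Google Analytics, the comparison boils down to the returning-visitor share per month and how it moves. This Python sketch assumes you’ve exported new and returning visitor counts per month; the function names and the numbers are made up for illustration.

```python
def returning_share(new_visitors, returning_visitors):
    """Returning visitors as a percentage of all visitors."""
    total = new_visitors + returning_visitors
    return 100.0 * returning_visitors / total if total else 0.0


def month_over_month(monthly_counts):
    """Yield (month, share, change in percentage points vs. the previous month)."""
    previous = None
    for month, (new, returning) in monthly_counts:
        share = returning_share(new, returning)
        change = None if previous is None else share - previous
        yield month, share, change
        previous = share


# Hypothetical (new, returning) visitor counts exported per month.
counts = [("Jan", (800, 200)), ("Feb", (900, 100)), ("Mar", (850, 150))]
for month, share, change in month_over_month(counts):
    trend = "" if change is None else f" ({change:+.1f} pts)"
    print(f"{month}: {share:.1f}% returning{trend}")
```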

To adjust a low repeat-visit number, reward visitors when they come back to your site after an initial visit. You can do this by offering a piece of useful content, such as a free valuable report or with gifts such as free access to an insider event or e-course. Additionally, look for ways that you can enhance the return-visit experience and satisfy your visitors who come back for more.

As an example, Amazon has one of the highest repeat-visitor rates among shopping sites. When a visitor comes to Amazon the first time and browses, or looks at different products, Amazon automatically tracks the user’s movements. When the visitor returns, Amazon serves up the same or similar products.

Amazon also makes excellent use of an upsell strategy to persuade people to buy their products. This makes it easier for shoppers to find the exact product that they want to buy and encourages them to place their order instantly.

If your site doesn’t retain visitors and make them want to come back again, this could negatively impact your ranking.

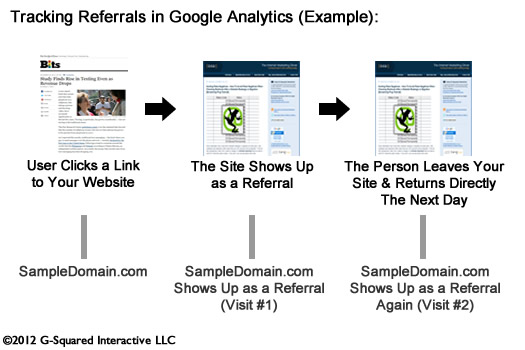

In your Google Analytics account, you should also pay attention to the sources of your traffic. Many domains might be referring visitors to you, but some may be sending you more visitors who make return visits to your site.

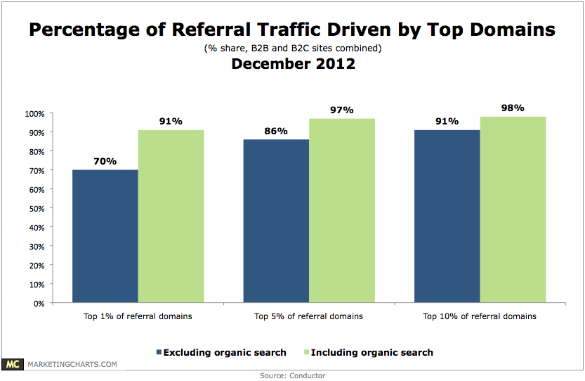

Focus on retention. Organic referral sources tend to be more reliable, in this regard, than social media sources.

Better Biz carried out a three-month study of both B2B and B2C websites to determine the best sources for targeted web traffic. Here’s what they found:

How can you get more repeat visitors to your site? Here are a few simple ideas that can help:

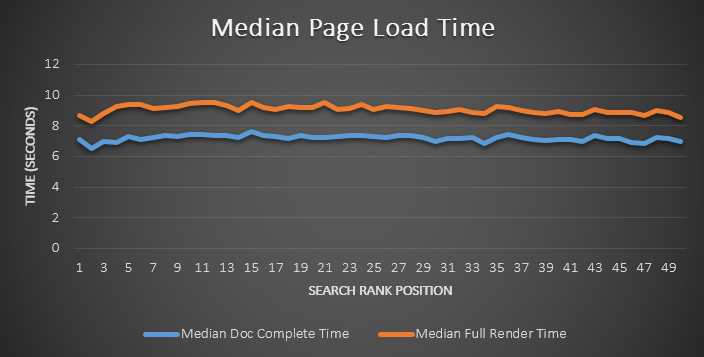

a). Decrease site load time – If you want to capture more repeat visitors, see if you can boost your site’s speed, especially if it’s on the slow side. According to Moz, “site speed actually affects search rankings.” Make your site load faster and your visitors will stay longer (and be more likely to come back in the future).

Although the direct impact on search rankings may not be terribly significant, as you’ll see below, it’s absolutely true that fast-loading sites create a better user experience and thus improve the perceived value of your site.

Here’s an interesting statistic: 40% of people abandon a website that takes more than 3 seconds to load. A slow-to-load page can result in a higher bounce rate, as well as a lower repeat-visitors rate.

When I discovered that both Google and my readers love sites that load very fast, I plunged into the topic. My efforts resulted in taking my site from a load time of 1.9 to 1.21 seconds. In turn, this increased the direct traffic coming to my blog to 2,000+ per day.

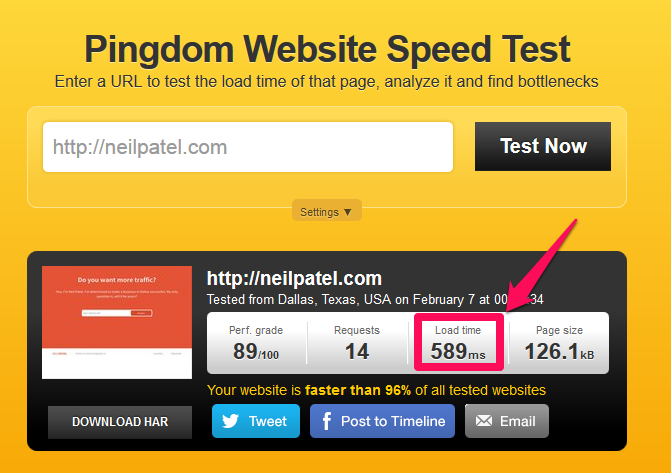

Here’s my initial load time, before optimization:

And, here’s the result, after the guys at StudioPress reworked the code:

Start improving your site load time today. You can follow this step-by-step guide.
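For a quick, repeatable check between tweaks, you can time how long your server takes to deliver a page. This Python sketch uses only the standard library and measures the download of the raw HTML, not images, scripts or rendering, so it will read lower than a full test in a tool like Google PageSpeed Insights. Taking the median of several runs smooths out network noise; the function names and the default of five runs are assumptions.

```python
import statistics
import time
import urllib.request


def fetch_html(url):
    """Download the raw HTML of a page (no images, CSS or JavaScript)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


def median_fetch_time(url, runs=5, fetcher=fetch_html):
    """Median wall-clock seconds taken by `fetcher(url)` over several runs."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fetcher(url)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)
```

For example, `median_fetch_time("https://example.com/")` returns the median in seconds (it needs network access). The `fetcher` parameter lets you swap in a different download function without changing the timing logic.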

b). Be Helpful – One of the five ways to get repeat visitors is to help people. Your content should be able to solve a definite problem. For example, you could write a step-by-step tutorial on any topic relevant to your site’s niche.

So, if you regularly write about SEO or internet marketing-related topics, you could provide a detailed Google Analytics tutorial guide, accompanied by explanatory screenshots. This guide would be a great example of targeted problem-solving content, since a lot of people struggle to understand GA.

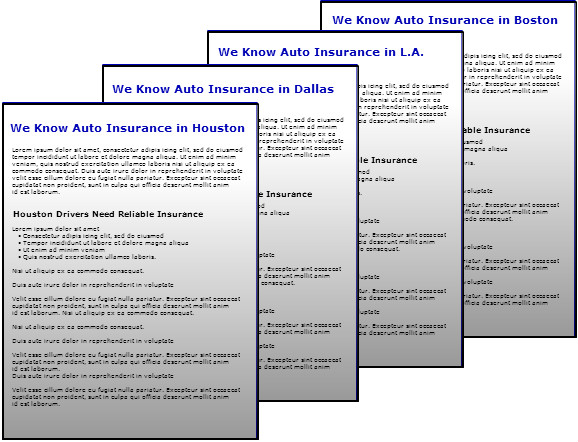

High percentage of boilerplate content – Boilerplate content refers to the content that you reuse on your site. For instance, a particular paragraph in a useful article might be reused in a few places on your site. One or two such paragraphs probably won’t do much harm. But, if the overall percentage of boilerplate content gets too high, your site could fall in the rankings.

As a general rule, avoid using the same or very similar content on more than one page on your site. Focus on unique content – that’s the best way to improve your rankings.
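To get a feel for how much of your site is boilerplate, you can count how many paragraphs repeat across pages. The Python sketch below assumes you’ve already extracted each page’s paragraphs into a dictionary keyed by URL; it treats a paragraph as boilerplate when the same text (ignoring case and surrounding spaces) appears on more than one page. Google doesn’t publish a boilerplate threshold, so the function name and the measure itself are illustrative.

```python
from collections import Counter


def boilerplate_share(pages):
    """Percentage of paragraph occurrences whose text appears on more than one page.

    `pages` maps a URL to the list of paragraph strings on that page.
    """
    pages_containing = Counter()
    for paragraphs in pages.values():
        # Count each distinct paragraph at most once per page.
        for text in {p.strip().lower() for p in paragraphs if p.strip()}:
            pages_containing[text] += 1
    total = sum(pages_containing.values())
    if total == 0:
        return 0.0
    repeated = sum(n for n in pages_containing.values() if n > 1)
    return 100.0 * repeated / total


site = {
    "/beard-oil": ["How to pick a beard oil.", "Sign up for our newsletter!"],
    "/beard-balm": ["Balm versus oil explained.", "Sign up for our newsletter!"],
}
print(f"{boilerplate_share(site):.0f}% boilerplate")
```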

One kind of boilerplate that occurs frequently is “hidden content.” When you show users one version of a page while making different content crawlable by Google, Google sees it as boilerplate content. Too much of that and your site could be penalized.

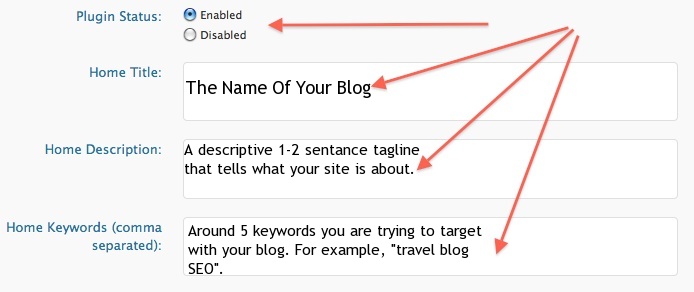

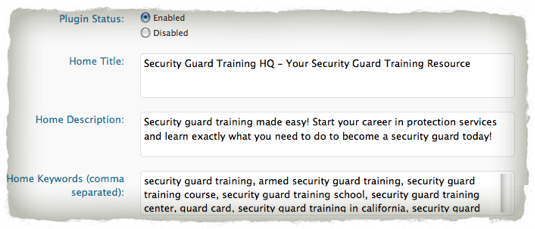

Irrelevant meta tags – It’s very important to set up meta tags precisely and accurately, because irrelevant meta tags will increase your risk of getting a Google penalty. Here’s how to add relevant meta tags to your site’s pages.

Remember, meta tags consist of the title, the description and the keywords.

If you’ve installed the All in One SEO plugin, you’ll find it’s easy to set up your meta tags within three minutes or less. Remember that Google Panda doesn’t like duplicate pages. Travel Blog Advice shows how simple it is to set up the plugin.
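Under the hood, a plugin like All in One SEO simply writes tags into each page’s <head> element. The Python sketch below shows roughly what that output looks like, including the robots noindex tag mentioned elsewhere in this article for thin pages. The function name is hypothetical; the keywords tag is optional here because Google has said it doesn’t use that tag for ranking.

```python
from html import escape


def head_tags(title, description, keywords=(), noindex=False):
    """Build <title>, meta description/keywords and optional robots tags."""
    tags = [
        f"<title>{escape(title)}</title>",
        f'<meta name="description" content="{escape(description)}">',
    ]
    if keywords:
        tags.append(f'<meta name="keywords" content="{escape(", ".join(keywords))}">')
    if noindex:
        # Asks search engines not to index this page.
        tags.append('<meta name="robots" content="noindex">')
    return "\n".join(tags)


print(head_tags("How to Choose Beard Oil",
                "A practical guide to picking & using beard oil.",
                keywords=["beard oil"]))
```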

In the image below, you’ll see how Pat Flynn’s meta tags are specific and relevant to the page in question. However, in modern SEO practice, it’s not advisable to have a lot of keywords, even if they’re all relevant.

Note: Avoid excessive keyword placement, otherwise known as keyword stuffing. According to David Amerland, this can lead to a penalty for your site, when Panda updates are released.
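Google doesn’t publish a “safe” keyword density, but measuring it can help you spot pages where a phrase is repeated unnaturally often. This Python sketch counts how many times a phrase appears per 100 words; the tokenization and the function name are assumptions, and the number is a prompt to reread the page, not a pass/fail test.

```python
import re


def keyword_density(text, phrase):
    """Occurrences of `phrase` per 100 words of `text` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)


copy = "Beard oil keeps a beard soft. Choose a beard oil with care."
print(f"{keyword_density(copy, 'beard oil'):.1f} per 100 words")
```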

How to recover from a Panda hit – How do you identify and recover your site from a Panda penalty? If your website or blog was hit by Panda, your next step is to figure out a plan to remedy the problem or problems. This can be difficult; there are many articles and blog posts online that discuss the theory behind the penalties, but provide no actionable steps to take to fix the problem.

By taking action, Portal Programas recovered 65% of their web traffic after the Panda 4.0 update. And, they did it by following a simple plan that focused on user experience.

If you see a drop in organic traffic and rankings right after a Panda update, that’s a strong sign you’ve been penalized by Google.
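Before concluding you’ve been hit, it helps to put a number on the drop. This Python sketch compares average daily organic sessions in the two weeks before an update date with the two weeks starting on it, assuming you’ve exported daily organic sessions (from Google Analytics, for example) into a dictionary keyed by date. The window length and function name are assumptions, and a drop can also come from seasonality or changes to your own site, so treat the result as one piece of evidence.

```python
from datetime import date, timedelta


def traffic_change(daily_sessions, update_day, window=14):
    """Percent change in mean daily organic sessions after `update_day`.

    Compares the `window` days before the update with the `window` days
    starting on it. `daily_sessions` maps datetime.date -> sessions.
    """
    def mean_over(offsets):
        values = [daily_sessions[update_day + timedelta(days=d)]
                  for d in offsets
                  if update_day + timedelta(days=d) in daily_sessions]
        return sum(values) / len(values) if values else None

    before = mean_over(range(-window, 0))
    after = mean_over(range(0, window))
    # `not before` also rejects a zero baseline, avoiding division by zero.
    if not before or after is None:
        raise ValueError("not enough traffic data on both sides of the update")
    return 100.0 * (after - before) / before


panda_4 = date(2014, 5, 20)  # Panda 4.0 rollout date mentioned above
sessions = {panda_4 + timedelta(days=d): (1000 if d < 0 else 600) for d in range(-14, 14)}
print(f"{traffic_change(sessions, panda_4):+.0f}% after the update")
```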

For Panda, you want to avoid showing people thin pieces of content on your site’s pages. Either beef up thin content or remove it from your site, especially on the archive pages that have 10 – 100 words.
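A simple word count will surface most of those thin archive pages. The Python sketch below assumes you’ve already extracted the main text of each page into a dictionary keyed by URL; the 100-word cut-off echoes the archive pages described above and is a heuristic, not a Google threshold. The function name and sample pages are hypothetical.

```python
import re


def thin_pages(pages, min_words=100):
    """Return (url, word_count) pairs for pages under `min_words`, thinnest first."""
    results = []
    for url, text in pages.items():
        count = len(re.findall(r"\S+", text))
        if count < min_words:
            results.append((url, count))
    return sorted(results, key=lambda item: item[1])


site = {
    "/2013/05/": "Posts from May 2013",
    "/guide-to-beard-oil/": "word " * 500,
    "/tag/grooming/": "Grooming archive page with a short intro paragraph",
}
for url, count in thin_pages(site):
    print(f"{url}: {count} words")
```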

Rewriting your content is another simple way to remove the penalty from your site, transforming it into a high quality site in Google’s eyes. Eric Enge, Stone Temple Consulting President, told Search Engine Watch that one of his clients saw a 700% recovery by rewriting and adding content to their site.

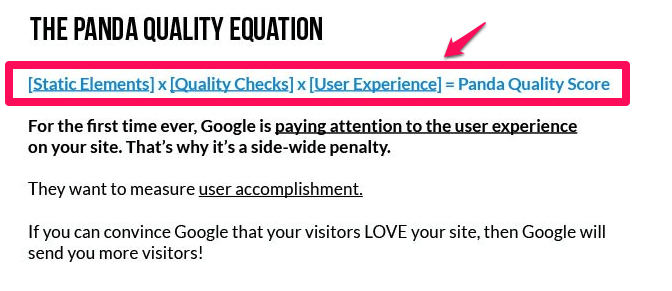

Improve Panda Quality Score – In addition to removing thin content pages or adding more content to make them more detailed and helpful, you should also pay attention to your Panda quality score.

Follow this Panda quality equation to obtain a higher score:

- Static Elements

- Quality Checks

- User Experience

The equation above came from Google, so we can trust it to help us recover from a Panda penalty. Let’s explore each of the items:

1) Static Elements – Every site should have static elements or pages that state what the site does, who is behind it and any applicable terms of service. The static elements are usually: Privacy Policy, Contact, About and Terms of Service.

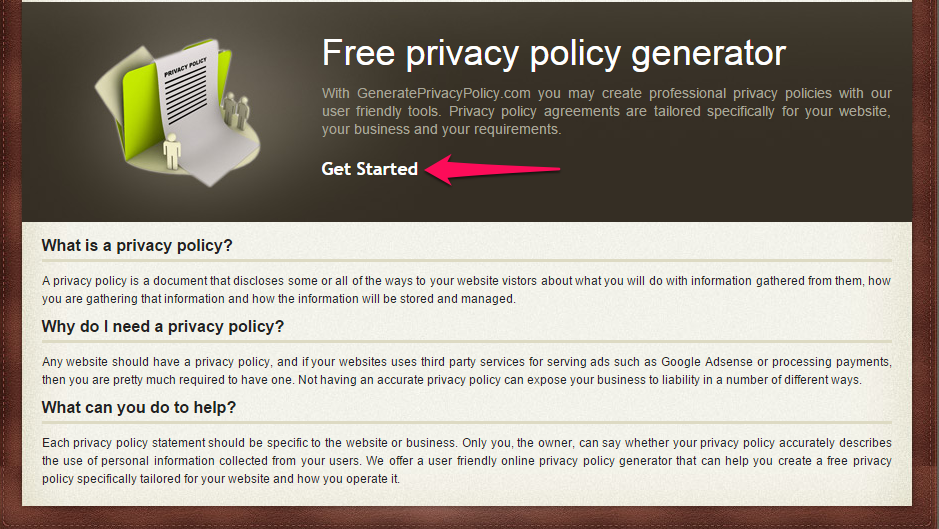

i). Privacy Policy – Most Privacy Policy content is regurgitated or generated with a third-party tool. You can always add a no-index or no-follow tag to this page’s <head> HTML element.

But, Google still prefers that you make this page unique. Avoid copying and pasting from other sources, as Google considers this to be duplicate content.

If you use a third-party tool, personalize and rewrite the content it generates. One good premium (paid) service to consider is MyPrivacyPolicy.

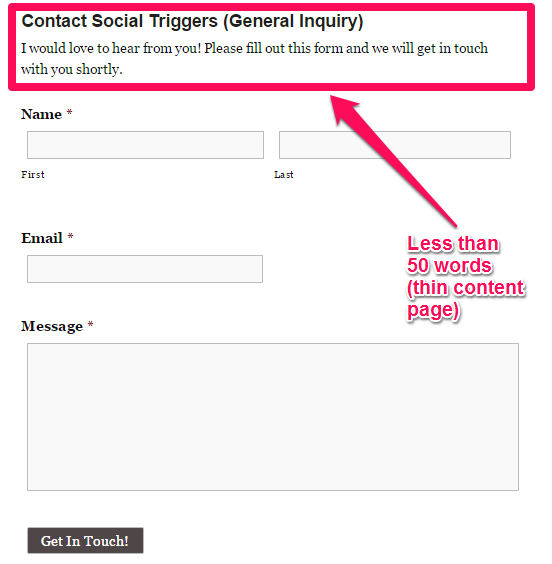

ii). Contact – Another static element on your site is the “contact” page. It’s usually thin, with fewer than 100 words of content.

It’s important that you either add a no-index tag to this page or add more content below the contact form or address, because Googlebot crawls and stores home and office addresses, emails, authors, phone numbers, etc.

Note: Google has made it very clear that “if only one or two pages on your site are high quality, while the rest are considered low quality, then Google will determine that your site is low quality.”

Every page counts, so strive to make your static elements (contact page, About page, etc.) rich, unique and helpful.

iii). About – Your “About Page” is another important static element that can lift or lower your Panda quality score. Do you know How to Write the Perfect About Page?

In a nutshell, you want to write unique and valuable content for this page, just as with all your site’s pages. Tell a story to captivate your audience and provide a clear call-to-action.

An “About” page with only a few sentences can lead Google to assume that your entire site is low quality. Take the opportunity to update your page – after all, it’s your story, your experience and your pains and gains.

iv). Terms of Service – Although most visitors won’t even click this page to read its content, it’s important you make it unique and Google-friendly. If you’re a blogger, adding this page to your blog is optional. However, if your site is an ecommerce or services company site, you’ll want to make sure that you have this covered.

The same rules that you followed when creating your “privacy policy” content also apply here. Try to craft a unique TOS page. Make sure it’s in-depth (700 – 1000 words) and, as far as possible, interesting to read.
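To spot thin static pages across your site, you can compare each page’s word count against a minimum. This is a minimal sketch. It assumes you’ve already extracted each page’s visible text into a dictionary. The page names and the 100- and 700-word minimums are taken loosely from the figures above; they are not official Google numbers.

```python
# Illustrative minimum word counts per page type (not official Google figures).
MIN_WORDS = {"contact": 100, "terms": 700}


def thin_pages(pages: dict, minimums: dict = MIN_WORDS) -> list:
    """Return names of pages whose word count falls below their configured minimum."""
    return [
        name
        for name, text in pages.items()
        # Pages without a configured minimum (e.g. "about") are skipped.
        if name in minimums and len(text.split()) < minimums[name]
    ]
```

For example, `thin_pages({"contact": contact_text, "terms": tos_text})` returns the page names you should expand first.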

2) Quality Checks – In the Panda Quality Equation we considered earlier, one of the factors that can help recover your site from a Panda penalty is a solid Quality Check. In other words, the site code needs to be excellent and should meet current standards.

Unmatched HTML tags, PHP errors, broken JavaScript and improper CSS rules can all result in a poor user experience. We know Google values a great user experience, because it’s one of the ways Google gauges how engaging your site is.
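You can catch unmatched HTML tags before a crawler or visitor does. One quick way is to walk the markup with Python’s built-in html.parser module and track open tags on a stack. This sketch is stricter than real browsers. HTML lets some elements, such as <p> and <li>, close implicitly, so treat the output as a list of things to review rather than as definitive validation errors. For full standards checking, a dedicated validator such as the W3C Markup Validation Service is more reliable.

```python
from html.parser import HTMLParser

# Void elements never take a closing tag, so they are never pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TagBalanceChecker(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.stack: list = []   # currently open tags, innermost last
        self.errors: list = []  # problems found so far

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags such as <br/> need no match

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if tag not in self.stack:
            self.errors.append(f"stray </{tag}>")
            return
        # Pop back to the matching open tag; anything skipped was never closed.
        while self.stack[-1] != tag:
            self.errors.append(f"unclosed <{self.stack.pop()}>")
        self.stack.pop()


def check_tags(html: str) -> list:
    """Return a list of unmatched-tag problems found in html."""
    checker = TagBalanceChecker()
    checker.feed(html)
    checker.close()
    return checker.errors + [f"unclosed <{t}>" for t in reversed(checker.stack)]
```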

If your site was built using older versions of HTML, you’ll want to consider upgrading the site using HTML5. Make sure that your meta description and title tags are unique and contain the relevant keywords that you’re targeting.
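To confirm that your title tags and meta descriptions are unique, you can group pages by each value and report any value that repeats. The sketch assumes a hypothetical `pages` dictionary that maps each URL to its title and description. You would build this from your own crawl or CMS export. Matching ignores case and surrounding whitespace.

```python
from collections import defaultdict


def find_duplicates(pages: dict, field: str) -> dict:
    """Map each repeated value of `field` (e.g. "title") to the URLs that share it."""
    seen = defaultdict(list)
    for url, meta in pages.items():
        value = meta.get(field, "").strip().lower()  # normalize before comparing
        if value:
            seen[value].append(url)
    return {value: urls for value, urls in seen.items() if len(urls) > 1}
```

Run it once with `field="title"` and once with `field="description"`. Rewrite any entries it returns.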

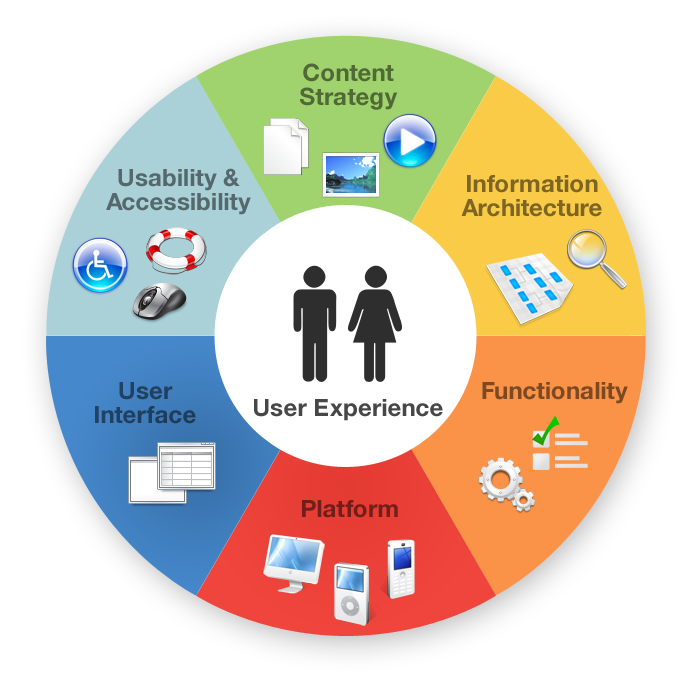

3) User Experience – Since Google Panda is a site-wide penalty that scores your entire site, you’ll want to focus on improving your site’s user experience. Remember: user experience is about users. When you improve your customer experience, you improve your chances of recovering from a Panda hit.

As you map user experience, and especially if your analysis shows a need to improve it, consider these questions to help get you started:

i) Do you provide a good user experience at every touchpoint? If you sell a product or service, how do your visitors or prospects receive it?

ii) Does your content solve a particular problem? Your users show how satisfied they are with your site through their engagement with your content. Do they stay and read it? Do they leave comments on your posts?

iii) Can you improve your navigation? If users can’t easily navigate from any part of your site to the homepage, you have some work to do. Look for sites with excellent navigation – one good example is Mindtools.com.

Enhancing your site’s navigation can help improve your organic rankings and traffic. Make all your navigation links clickable and make sure your site search works properly.
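Navigation problems are easier to fix once you can measure them. The sketch below models your internal links as a graph, using a hypothetical `links` dictionary that maps each URL to the URLs it links to. It uses breadth-first search to find how many clicks each page is from the homepage. It also lists pages that have no link back to the homepage. Any page missing from the `click_depths` result can’t be reached from the homepage at all.

```python
from collections import deque


def click_depths(links: dict, home: str = "/") -> dict:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_without_home_link(links: dict, home: str = "/") -> list:
    """Pages (other than the homepage) that have no direct link back to it."""
    return [page for page, targets in links.items() if page != home and home not in targets]
```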

iv) Do users come back to visit your site? As we’ve already discussed, Google is concerned not only about your current visitors, but also about your repeat visitors.

When your content is top-notch, people will come back for more. If it’s not, then they’ll bounce, resulting in a low quality score for your site.

v) How quickly do your web pages load? If your site load time is less than 4 seconds, then you’re good to go. If not, then you have some room for improvement. Look for ways to make your site load faster through optimization.

How do you find out the speed of your site?

Step #1: Go to tools.pingdom.com. Plug in your site URL and click the “Test Now” button.

Step #2: Check your site speed.
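You might also want a scripted spot check alongside Pingdom. The sketch below uses Python’s standard library to time how long it takes to download a page’s HTML. It’s only a rough proxy. It ignores images, scripts, CSS and rendering, so it will usually report a lower number than a full page-speed test. The 4-second target comes from the guideline above.

```python
import time
import urllib.request

TARGET_SECONDS = 4.0  # the 4-second guideline from the text above


def fetch_seconds(url: str, timeout: float = 30.0) -> float:
    """Time the download of the HTML document at url (main document only)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()
    return time.perf_counter() - start


def meets_target(seconds: float, target: float = TARGET_SECONDS) -> bool:
    """True when the measured time is under the target."""
    return seconds < target
```

For example, `elapsed = fetch_seconds("https://example.com/")` followed by `meets_target(elapsed)` tells you whether the HTML alone is already too slow.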

Google Penguin Update

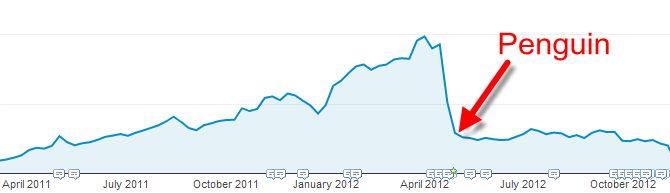

On April 24, 2012, Google released the first Penguin update. While the Panda update was primarily targeted at thin and low-quality content, Google Penguin is a set of algorithm updates that puts more focus on incoming links.

Before Penguin’s release, site owners, content marketers and webmasters all employed different tactics for link building.

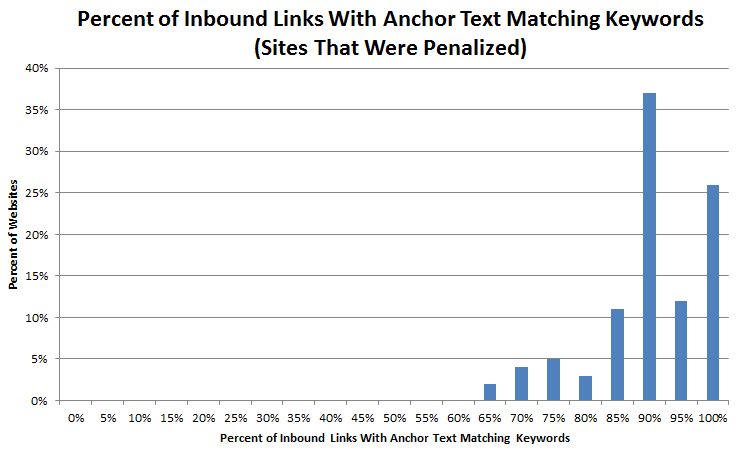

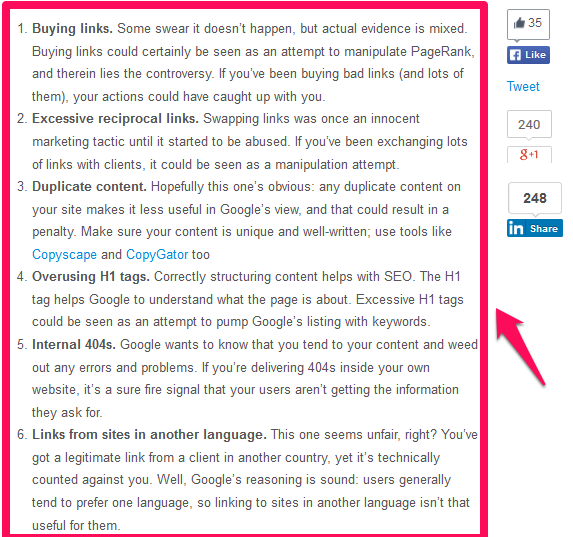

A handful of those tactics still work, but a majority of the old-fashioned link building strategies are dead. According to Rival IQ, there are four factors that will get your site penalized by Penguin:

i). Link schemes – Links are still important, but links from high-quality sites are the best way to improve search rankings.

Link schemes are activities geared toward generating links that manipulate search engines into ranking your web pages higher. If you fall into the trap of always building links to your site from every other site, you may be penalized by Penguin.

Rap Genius, a website dedicated to interpreting lyrics and poetry, was penalized because Google found it was using link schemes to manipulate its rankings.

Bottom line: Avoid all forms of link scheming. It’s just not worth the risk.

ii). Keyword stuffing – Matt Cutts has warned against stuffing your pages with keywords. No matter how in-depth and easy to navigate a site is, Penguin will most likely find and penalize keyword-stuffed pages. In most cases, it’s easy to see why, especially if you’ve ever actually seen a keyword-stuffed page. Here’s an example:

Buying valentine’s gift for your spouse is a great step to take. This year, valentine’s gift should be an avenue to express how much love you’ve for him or her. Make sure the valentine’s gift is well-researched. But don’t stop there. Make it a culture to always show love to your spouse, whether there is valentine celebration, christmas etc. When you show love, you get love. For instance, when you show love today, you’ll live to be loved. Are you ready to choose the best valentine’s gift?

Do you see how many times the keyword “valentine’s gift” is mentioned in this thin piece of content? That’s keyword stuffing and it’s contrary to the Google Webmaster Guidelines.
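You can quantify how heavily a page leans on one phrase. Count the phrase’s occurrences and the share of the page’s words they account for. Google doesn’t publish a “safe” keyword density, so use this only to spot outliers like the example above. The word-splitting rules here are a simplifying assumption.

```python
import re


def words(text: str) -> list:
    """Lowercase words, treating curly and straight apostrophes the same."""
    return re.findall(r"[a-z0-9]+(?:'[a-z0-9]+)*", text.lower().replace("’", "'"))


def keyword_density(text: str, phrase: str) -> tuple:
    """Return (occurrences of phrase, percentage of all words those occurrences use)."""
    all_words, target = words(text), words(phrase)
    n = len(target)
    if n == 0 or not all_words:
        return 0, 0.0
    hits = sum(1 for i in range(len(all_words) - n + 1) if all_words[i:i + n] == target)
    return hits, 100.0 * hits * n / len(all_words)
```

Calling `keyword_density(passage, "valentine’s gift")` on the passage above shows how much of that short text the phrase consumes.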

Don’t use excessive keywords in your content. Don’t try to manipulate your rankings. If a particular keyword doesn’t sound good or doesn’t flow smoothly in the content, don’t use it.

Note: Keywords are still relevant in the post-Panda and post-Penguin era. Just keep your focus on the intent of your keywords, and write content that appeals to people’s emotions and solves their problems. Effective SEO has always been that way. Let’s keep it simple.

iii). Over-optimization – According to KISSmetrics, “SEO is awesome, but too much SEO can cause over-optimization.” If you over-optimize your anchor texts, for example, this could get you penalized by Penguin. The best approach is to incorporate social media marketing and gain natural or organic links to your web pages.

In April 2012, Google rolled out another update that penalized large sites that were over-optimizing keywords and anchor texts, engaging in link building schemes and pursuing other forms of link manipulation.

One of the signs that you might be over-optimizing is having keyword-rich anchor texts for internal links, i.e., anchor text that links to a page within your own site.

Here’s an example:

Learn more about HP Pavilion 15 laptops and their features.

(Links to: example.com/hp-pavilion-15-laptops.htm)

Another example:

Do you know the best iPhone case that’s hand-crafted for you?

(Links to: example.com/best-iphone-case-hand-crafted)

Note: Anchor text that exactly matches its destination URL can help your SEO in moderation. When it becomes too much, however, your site can be penalized for over-optimization.
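To audit internal links for this pattern, you can flag anchors whose words exactly match the destination URL’s slug. Then measure what share of your links do so. This is a hypothetical heuristic. “Exact match” here simply means the anchor’s words equal the words in the last URL path segment. Google hasn’t published any acceptable ratio.

```python
import re
from urllib.parse import urlparse


def slug_words(url: str) -> list:
    """Words in the URL's last path segment, minus any file extension."""
    # Works with or without a scheme, since only the last path segment is used.
    last = urlparse(url).path.rstrip("/").rsplit("/", 1)[-1].lower()
    last = re.sub(r"\.[a-z0-9]+$", "", last)  # drop ".htm", ".html", etc.
    return [w for w in re.split(r"[-_\s]+", last) if w]


def is_exact_match(anchor: str, url: str) -> bool:
    """True when the anchor's words are exactly the words in the destination slug."""
    return re.findall(r"[a-z0-9]+", anchor.lower()) == slug_words(url)


def exact_match_share(links: list) -> float:
    """Percentage of (anchor, url) pairs that are exact matches."""
    if not links:
        return 0.0
    return 100.0 * sum(is_exact_match(a, u) for a, u in links) / len(links)
```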

iv). Unnatural links – The funny thing about unnatural links is that they don’t look good to anyone – not to your readers and not to Google. These links may appear on sites that are totally off-topic. Cardstore lost its rankings through unnatural links that appeared in article directories.

Yes, such links worked in the past and larger sites were the best players of that game. Google Penguin destroyed the playing field for those big sites, which then lost all of the benefits of their hard work. The moral of the story: Your links should be natural.

When you buy or trade links with someone, there’s a good chance that the anchor texts or links will be totally irrelevant. Here’s another object lesson: Overstock.com plummeted in rankings for product searches when Google discovered that the site exchanged discounts for .EDU links.

I don’t recommend link buying. But, if you must do it, make sure that the referring site is relevant and authoritative and that the links are natural. Here’s a better explanation from Search Engine Land:

How Penguin works – The Penguin algorithm is a search filter that depends on Google’s frequent algorithm updates and attempts to penalize link spam and unnatural links.

The Penguin code simply looks for aggressive link building practices aimed at manipulating the search engine rankings.

For example, if you’re building backlinks too fast for a new site, Google can easily detect that you’re aggressive and penalize your site or even delete it from their search index altogether.
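Google hasn’t disclosed how it judges link velocity, but you can watch your own backlink growth for sudden spikes. This sketch assumes you’ve exported weekly counts of new backlinks from a backlink tool. It flags any week that exceeds a multiple of the median of the preceding weeks. The 3x factor and 4-week window are arbitrary starting points, not Google thresholds.

```python
from statistics import median


def velocity_spikes(weekly_new_links: list, factor: float = 3.0, window: int = 4) -> list:
    """Indexes of weeks whose new-link count exceeds factor x the median of the prior `window` weeks."""
    spikes = []
    for i in range(window, len(weekly_new_links)):
        # Floor the baseline at 1 so a jump from zero (e.g. a brand-new site) still registers.
        baseline = max(median(weekly_new_links[i - window:i]), 1)
        if weekly_new_links[i] > factor * baseline:
            spikes.append(i)
    return spikes
```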

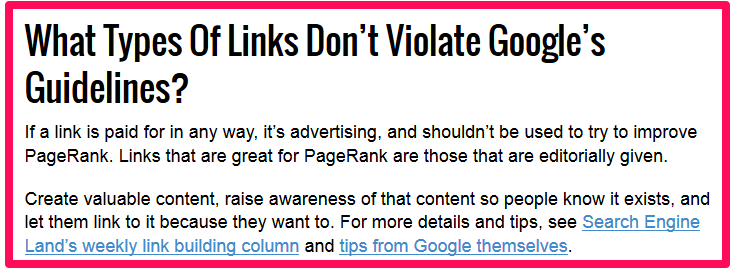

Remember, any link that you build now or in the future with the intention of manipulating your search engine rankings can be treated as part of a link scheme, which violates Google’s Webmaster Guidelines.

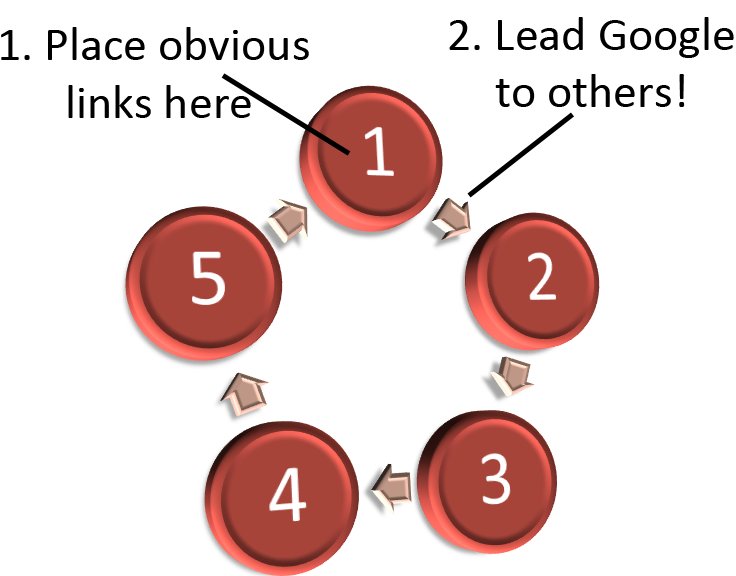

Link exchanges, paid links and other forms of black-hat link building techniques are all bad SEO practices. They may work for a time, but sooner or later, Google will find out. More importantly, avoid using link wheels or exchanges to manipulate search rankings.

The correlation between Panda & Penguin – When you pay attention to making your thin pages and low-quality content better, you’re building a site to which other people will naturally link. And, that’s the relationship between Panda and Penguin updates.

Even if your webpage contains unique, useful and in-depth content, you’ll still face a Penguin penalty if your links are low-quality.

What’s the difference between Panda and Penguin? The Panda update is primarily concerned with content quality, while Penguin aims to drown out spammy or aggressive links that strive to manipulate search engine rankings.

It’s important to keep an eye on both updates. When your site is penalized by Panda, there’s a good chance that Penguin will affect your site, too. Some SEOs and site owners have experienced multiple penalties, all the while wondering what happened to their rankings.

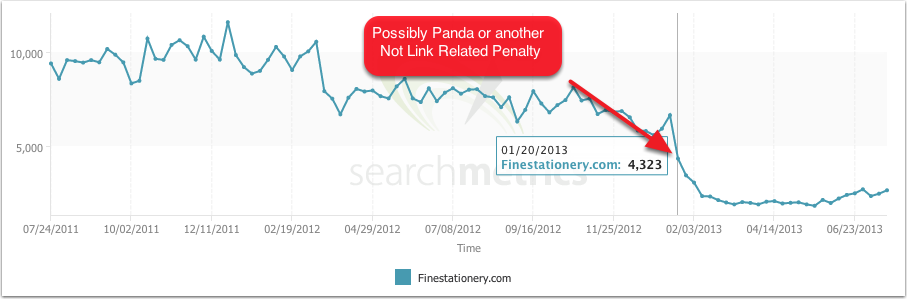

A good example of this interplay between Panda and Penguin is what happened to Finestationery.com. When the site began to drop in organic rankings, it wasn’t clear precisely what was happening. Was the site being penalized by Panda or Penguin?

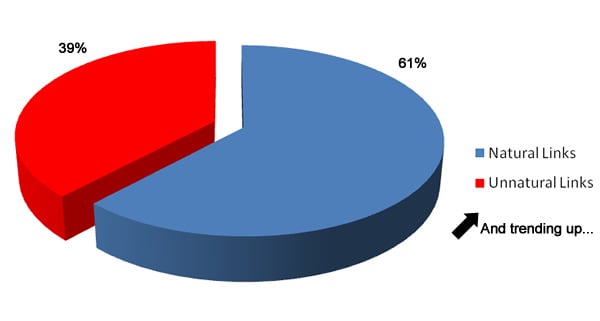

How to avoid a Penguin penalty – If you don’t want to get a Penguin penalty, then position your blog to earn natural links. Search Engine Watch shared an instructive case study of one site where they uncovered a mix of 61% natural links and 39% unnatural links and explained what steps they took to improve the site.

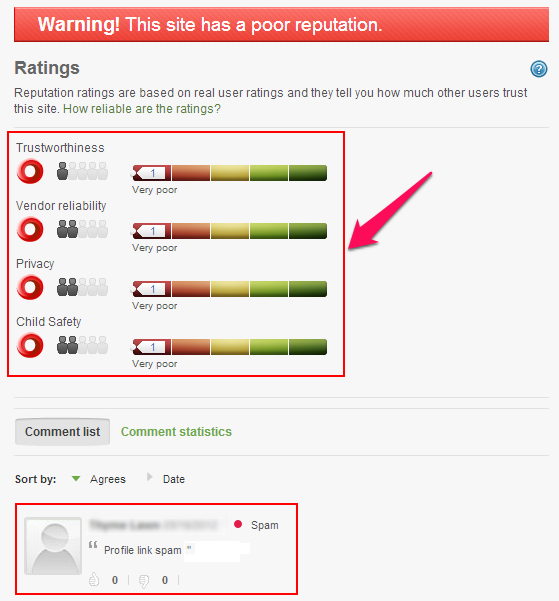

You can use Web of Trust (WOT) to gauge how much your visitors trust your site. If your WOT score is poor, then you have a bit more work to do, which still boils down to producing great content and building social engagement.

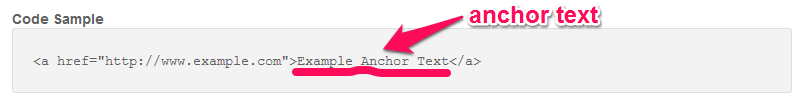

Understanding anchor text: Anchor text is simply the clickable text in your link. The hyperlink itself is masked or hidden. You can’t see the link’s destination URL until it’s clicked or hovered over, but the anchor text is visible on the page.

As it turns out, excessive use of exact keywords in your anchor texts can trigger a Penguin penalty. This was a stunning realization for many SEOs. For quite some time, SEOs had focused on creating anchor texts that precisely matched targeted keywords, in an effort to help build links.

After Penguin was released, many site owners experienced a huge drop in organic traffic and rankings. The reason is simple: excessive or “over”-use of precise keyword-matching anchor text.

Indeed, anchor text plays a vital role in the Penguin update. This is why it’s important to build the right kinds of links, using relevant and generic words, in order to reduce the risk of a Google penalty.
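To see how much your backlink profile relies on exact-match anchors, you can bucket each anchor and compute the percentages. The categories (exact, branded, generic, partial, other), the GENERIC phrase list and the classification order are all illustrative assumptions. Adjust them to your own keyword and brand, and keep in mind that Google doesn’t publish a safe distribution.

```python
from collections import Counter

# Illustrative list of generic anchors; extend it with phrases from your own profile.
GENERIC = {"click here", "read more", "learn more", "here", "this post", "website"}


def classify_anchor(anchor: str, keyword: str, brand: str) -> str:
    """Bucket one anchor text relative to a target keyword and a brand name."""
    text = " ".join(anchor.lower().split())  # normalize case and whitespace
    if text == keyword.lower():
        return "exact"
    if brand.lower() in text:
        return "branded"
    if text in GENERIC:
        return "generic"
    if set(keyword.lower().split()) & set(text.split()):
        return "partial"
    return "other"


def anchor_profile(anchors: list, keyword: str, brand: str) -> dict:
    """Percentage of anchors in each bucket, rounded to one decimal place."""
    if not anchors:
        return {}
    counts = Counter(classify_anchor(a, keyword, brand) for a in anchors)
    return {label: round(100.0 * n / len(anchors), 1) for label, n in counts.items()}
```

A profile dominated by the "exact" bucket is the kind of pattern described above.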

Other types of link and content manipulation targeted by the Penguin update can also get you penalized by Google:

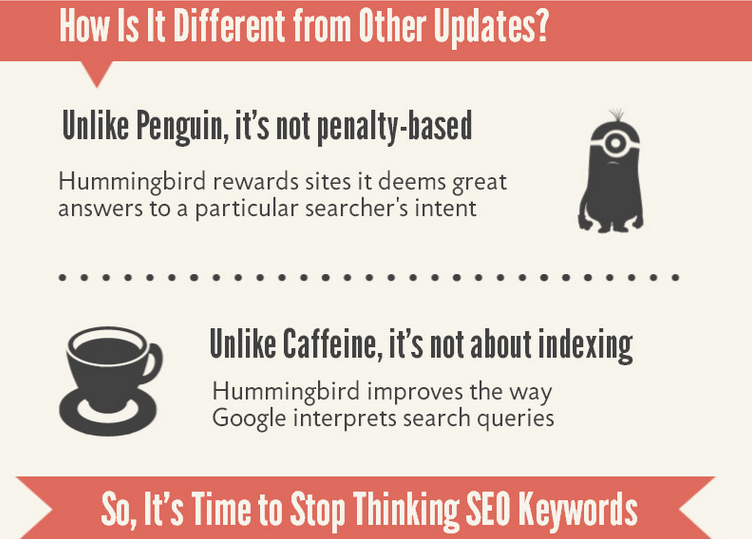

Google Hummingbird Changes

On September 26, 2013, Google released one of the most significant enhancements to the search engine algorithm to date. Hummingbird gives Google a “precise and fast” platform where search users can easily find what they’re looking for when they type a given keyword in the search engine.

In other words, this update is designed to improve its delivery of results for the specified keyword – and not just the exact keyword itself, but what we call the “keyword’s intent.” In a sense, Panda and Penguin were ongoing updates to the existing algorithm, whereas Hummingbird is a new algorithm.

This new algorithm makes use of over 200 ranking factors to determine the relevance and quality score of a particular site. Hummingbird serves as a sort of dividing line distinguishing the old SEO from the new.

Now the focus is on the users, not the keywords. Of course, keyword research will continue to be relevant, especially when you want to explore a new market.

But, when it’s time to produce content that will truly solve problems for people, you should focus on answering questions. In today’s SEO, start with the user, execute with quality content and then measure the impact of your webpage links.

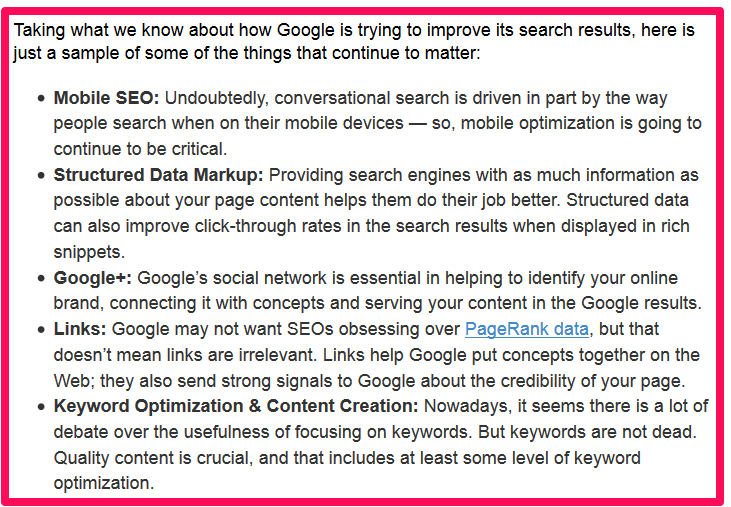

Jim Yu, CEO and founder of BrightEdge, explains some of the elements that still matter when you’re doing SEO in the Hummingbird era. Yu still believes that keyword research will continue to occupy the seat of power in SEO, but it should be done in service of the quality of your content.

Note: Hummingbird favors long-tail key phrases over seed/head keywords. Sites that use long-tail keywords have experienced a lot of success. 91% of my search traffic comes from long-tail keywords.
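You can export your organic search queries with click counts, for example from Google Search Console. From that export, you can estimate what share of your traffic comes from long-tail phrases. There’s no single official definition of “long-tail.” This sketch assumes three or more words, which is a common rule of thumb.

```python
def long_tail_share(clicks_by_query: dict, min_words: int = 3) -> float:
    """Percentage of clicks that come from queries with at least `min_words` words."""
    total = sum(clicks_by_query.values())
    if total == 0:
        return 0.0
    long_tail = sum(c for q, c in clicks_by_query.items() if len(q.split()) >= min_words)
    return 100.0 * long_tail / total
```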

Marcus Sheridan has used and continues to use long-tail keywords to drive organic visitors to his River Pools Company Blog.

If you want to learn more details about Hummingbird and how it has affected SEO since its 2013 release, the infographic from Search Engine Journal excerpted below will help:

Click Here To View the Full Infographic

Elements of Hummingbird: Since Hummingbird is not just an algorithm update, like Panda and Penguin, but rather a total change aimed at serving better search results to users, you should be aware of some of its more important elements. For all of these elements to come together and work properly, you must understand your audience.

Conversational Search

Conversational search is the core element of Hummingbird’s algorithm change.

No matter what your niche may be, there are conversational keywords that will enable you to create highly valuable content. These days, people search the web in a conversational way. Forty-four percent of marketers aim for keyword rankings, but there is more to SEO than keywords.

Google pulls data from its Knowledge Graph, along with social signals, to understand the meaning of words on a webpage.

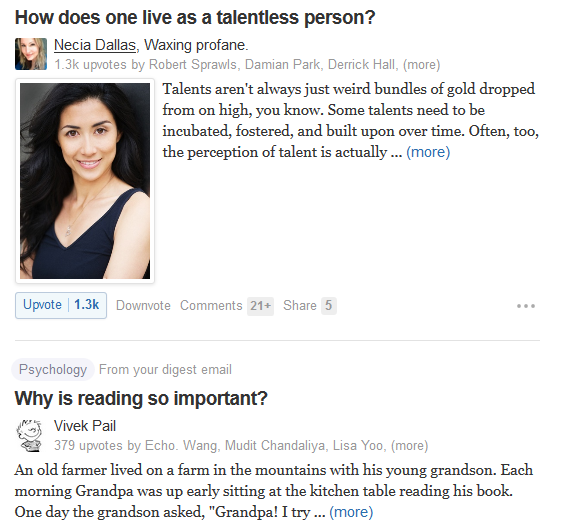

Why is Quora such a popular site? There are probably many reasons, but it’s due in part to one simple fact: Quora offers experts from diverse fields who willingly answer questions in a conversational way.

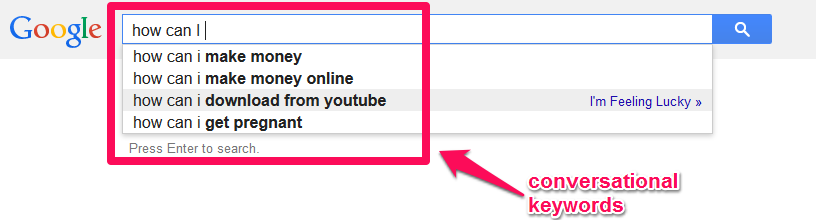

Site owners and content writers need to align their keywords and content, in order to best match the way people talk and search for information. Conversational keywords are question-based keywords. You’ll come across them when you carry out a search.

Your landing page should be able to answer the question that prompted the query in the first place. As an example, let’s say someone is searching for “best arthritis care in NJ.” Your content page should have that information for the searcher and not redirect them to an arthritis care site in Los Angeles.

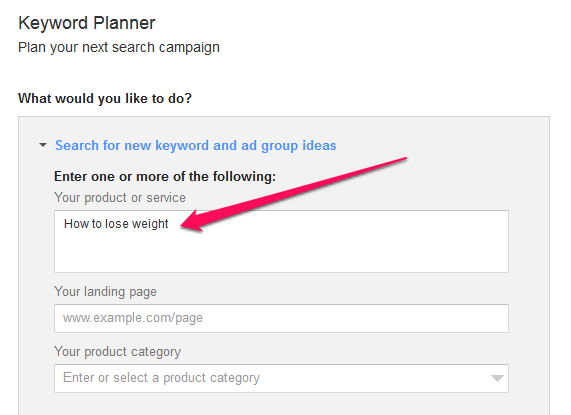

So, how do you find conversational keywords, a.k.a. question-based & long-tail keywords?

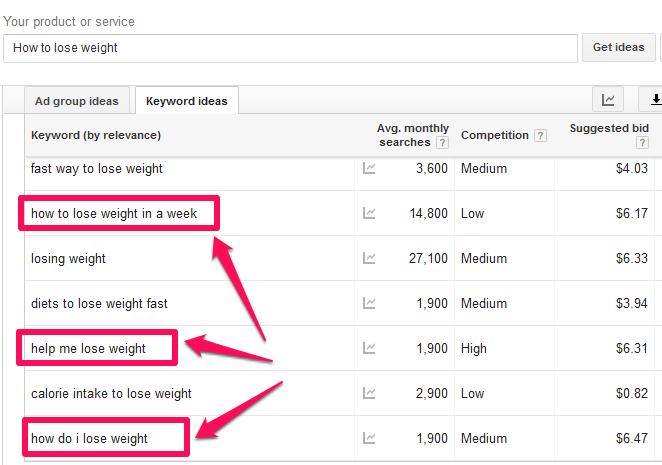

Step #1: Launch your Google Keyword Planner. Plug in a relevant “how to” keyword, e.g., “how to lose weight.”

Step #2: Identify your conversational long-tail keywords.

From the screenshot above, you can see that searchers are seeking information on several conversational keywords. They want answers from you. It’s your job to provide those answers in a conversational way.
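Once you’ve exported keyword ideas from Keyword Planner, you can pull out the question-based ones with a simple filter. This sketch assumes you’ve already loaded the keywords into a list of strings, since column names in the export can vary. The QUESTION_WORDS tuple is an illustrative starting list, not an exhaustive one.

```python
QUESTION_WORDS = ("how", "what", "why", "when", "where", "which", "who", "can", "does", "is")


def is_question(keyword: str) -> bool:
    """True when the keyword starts with one of the question words above."""
    words = keyword.lower().split()
    return bool(words) and words[0] in QUESTION_WORDS


def question_keywords(keywords: list) -> list:
    """Keep only question-based keywords, preserving their original order."""
    return [k for k in keywords if is_question(k)]
```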

“How to” key phrases and content, in particular, will better answer your users’ questions, which will prompt more engagement. In our example, you could write a weight-loss case study that’s useful, interactive and in-depth.

Around 87% of my blog posts are “how to” tutorials and that has been part of the reason for my success.

Remember: Post-Hummingbird, the users are the key focus.

Copyblogger also understands how to please their audience. Their blog post titles are magnetic and conversational in nature. Additionally, they understand their competitors, have a mobile responsive site and use social media to gain signals. All of these factors make Google delighted to send Copyblogger more traffic.

Content is the new ad. Traditional advertisements tend to interrupt users, but useful and interesting content will lure them in and make them repeat customers.

That’s the whole essence of content marketing – and it’s been my secret weapon for growing my software companies. You may not fully grasp Google’s policies, but follow Jenna Mills’ advice and your site will not only avoid Google penalties, but will also enjoy improved organic rankings and traffic.

Google Pigeon Update

So far, we’ve talked about Panda, Penguin and Hummingbird and how these Google algorithm updates affect site owners who want to improve search engine rankings without getting penalized.

However, there are other algorithm updates and changes that have taken place since the 2011 release of the first Panda. Specifically, in July 2014, there was the Pigeon update. For more information about Pigeon, see this post I wrote previously on everything you need to know about the Pigeon update.

How big an impact will Pigeon have on SEO and marketing? According to Search Engine Land, 58% of local marketers will most definitely change tactics after the Pigeon update.

Conclusion

I hope this Google Algorithm article has helped you to fully understand the major Google updates: Panda, Penguin, Hummingbird and Pigeon. These algorithm updates and changes have revolutionized SEO.

Bottom line: All you need to do is build links in a scalable, organic way and focus on providing the best-quality content you can (be it written blog posts or creative infographics) to grow your blog’s traffic.

What effects of these Google algorithm updates have you noticed on your site?